Taiwan AI Basic Act sets national strategy and safety rules

TL;DR

Taiwan’s Legislative Yuan passed the Artificial Intelligence Basic Act on Dec. 23, 2025, and local media reported its passage on Dec. 24. The act designates the National Science and Technology Council as the central AI authority, sets seven core principles for AI governance, and orders the creation of a national AI strategy committee under the Executive Yuan.

About this summary

This article aggregates reporting from 4 news sources. The TL;DR is AI-generated from original reporting. Race to AGI's analysis provides editorial context on implications for AGI development.

Race to AGI Analysis

Taiwan’s Artificial Intelligence Basic Act is a classic example of a jurisdiction trying to get ahead of AI risks without throttling innovation. By giving AI its own basic law and naming the National Science and Technology Council as the lead authority, Taiwan is signaling that it treats AI as critical national infrastructure, on par with energy or finance. The law’s seven principles, which include safety, privacy, explainability, fairness and accountability, are broadly aligned with the EU AI Act and OECD frameworks. That alignment should help Taiwanese firms plug into global markets without constantly second-guessing compliance. ([taipeitimes.com](https://www.taipeitimes.com/News/front/archives/2025/12/24/2003849407))

For the race to AGI, the signal is less about immediate technical progress and more about institutional readiness. This framework gives Taiwanese chipmakers, cloud providers and model builders a clearer regulatory runway, but it also bakes in worker protections and data governance expectations that could constrain some business models. Over time, the mandated national AI strategy committee could become a powerful coordination node, steering public R&D funding, compute access and sandbox programs in ways that either sharpen Taiwan’s position as a trusted AI hub or, if the rules ossify, weaken it.

Competitively, this moves Taiwan closer to the EU/Korea “rules-plus-industry” camp rather than the lightly regulated US approach, which may appeal to multinational partners looking for stable, rights-respecting environments to site AI workloads.

Related News

French administrative courts adopt rules on AI use in decisions

Today

Shanghai registers 9 new generative AI services, total hits 139

Today

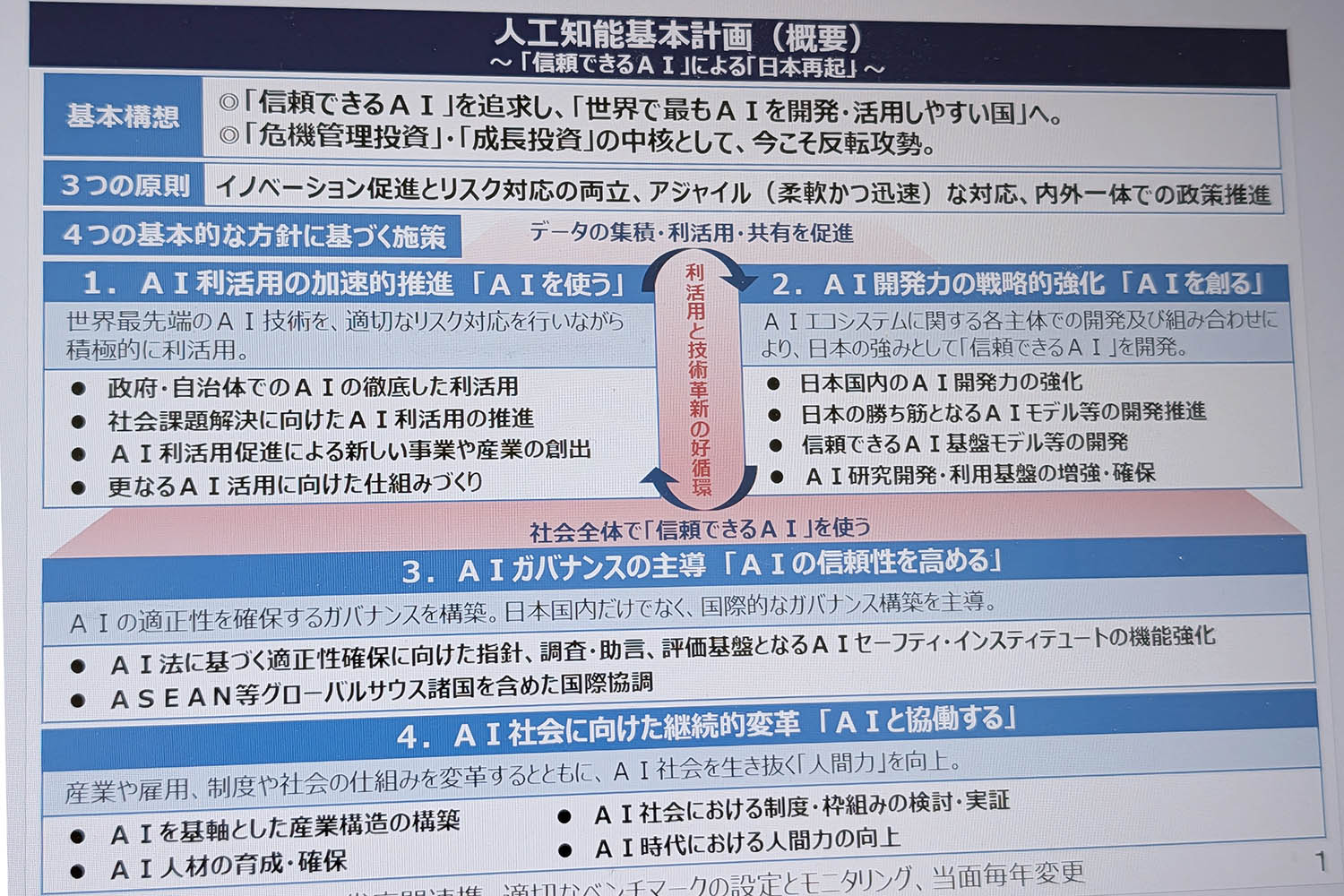

Japan AI Basic Plan aims to be easiest place to build AI

Today