French administrative courts adopt rules on AI use in decisions

TL;DR

Chile-based Diario Constitucional reported on December 24, 2025, that France’s administrative courts have adopted a regulation governing how artificial intelligence can be used in their functions. The instrument aims to balance technological modernization of judicial services with preservation of procedural guarantees and fundamental rights.

About this summary

This article aggregates reporting from one news source. The TL;DR is AI-generated from the original reporting. Race to AGI's analysis provides editorial context on the implications for AGI development.

Race to AGI Analysis

We don’t yet have the full text, but the reported move by France’s administrative courts to formalize how AI can be used in judicial functions fits a broader pattern: high-stakes institutions are codifying guardrails before AI tools become default infrastructure. The framing, which balances modernization of services against procedural guarantees and fundamental rights, mirrors the logic of the EU AI Act but translates it into the internal rules of a specific branch of government. That matters because courts are both users and interpreters of AI law: how they regulate their own use of AI will shape how they judge everyone else’s. ([diarioconstitucional.cl](https://www.diarioconstitucional.cl/2025/12/24/tribunales-administrativos-de-francia-adoptan-reglamento-que-regula-uso-de-la-inteligencia-artificial-en-sus-funciones/?utm_source=openai))

For the AGI race, court systems adopting internal AI rules is less about constraining today’s tools and more about building precedent for handling future, more capable agents. Questions like whether judges can rely on AI-generated drafts, how to disclose that use to litigants, and what standard of review applies to AI-assisted fact-finding will all become sharper as models improve. By moving early, French administrative courts may create a template that other jurisdictions adapt, especially in Continental Europe.

For AI developers and deployers, this is a reminder that “AI in government” isn’t just chatbots and document triage; it will eventually touch adjudication, licensing and enforcement. Being prepared with auditable logs, controllable models and clear human-in-the-loop patterns will be table stakes if you want your systems anywhere near the justice pipeline.
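To make "auditable logs" and "human-in-the-loop" concrete, here is a minimal Python sketch of one possible pattern: an append-only log in which each entry is hash-chained to the previous one, and an AI draft can only be marked approved when a named human reviewer is attached. Nothing here comes from the French regulation, whose text we have not seen; the class names, fields, and identifiers such as `demo-model` and `clerk-1` are hypothetical and chosen purely for illustration.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AuditEntry:
    # All field names are illustrative assumptions, not a standard schema.
    model_id: str
    prompt: str
    draft: str
    reviewer: Optional[str]
    approved: bool
    prev_hash: str
    entry_hash: str = ""


def _digest(entry: AuditEntry) -> str:
    """Hash every field except entry_hash itself, including the previous hash."""
    payload = json.dumps([
        entry.model_id, entry.prompt, entry.draft,
        entry.reviewer, entry.approved, entry.prev_hash,
    ])
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self) -> None:
        self.entries: List[AuditEntry] = []

    def record(self, model_id: str, prompt: str, draft: str,
               reviewer: Optional[str] = None,
               approved: bool = False) -> AuditEntry:
        # Human-in-the-loop rule: no approval without a named reviewer.
        if approved and not reviewer:
            raise ValueError("approval requires a named human reviewer")
        prev = self.entries[-1].entry_hash if self.entries else self.GENESIS
        entry = AuditEntry(model_id, prompt, draft, reviewer, approved, prev)
        entry.entry_hash = _digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = self.GENESIS
        for e in self.entries:
            if e.prev_hash != prev or e.entry_hash != _digest(e):
                return False
            prev = e.entry_hash
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("demo-model", "summarize filing", "draft text")
    log.record("demo-model", "summarize filing", "draft text",
               reviewer="clerk-1", approved=True)
    print(log.verify())
```

The hash chain only makes tampering detectable, not impossible; a production system would also need access controls and external anchoring of the latest hash, which are outside the scope of this sketch.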

Who Should Care

Related News

Shanghai registers 9 new generative AI services, total hits 139

Today

Taiwan AI Basic Act sets national strategy and safety rules

Today

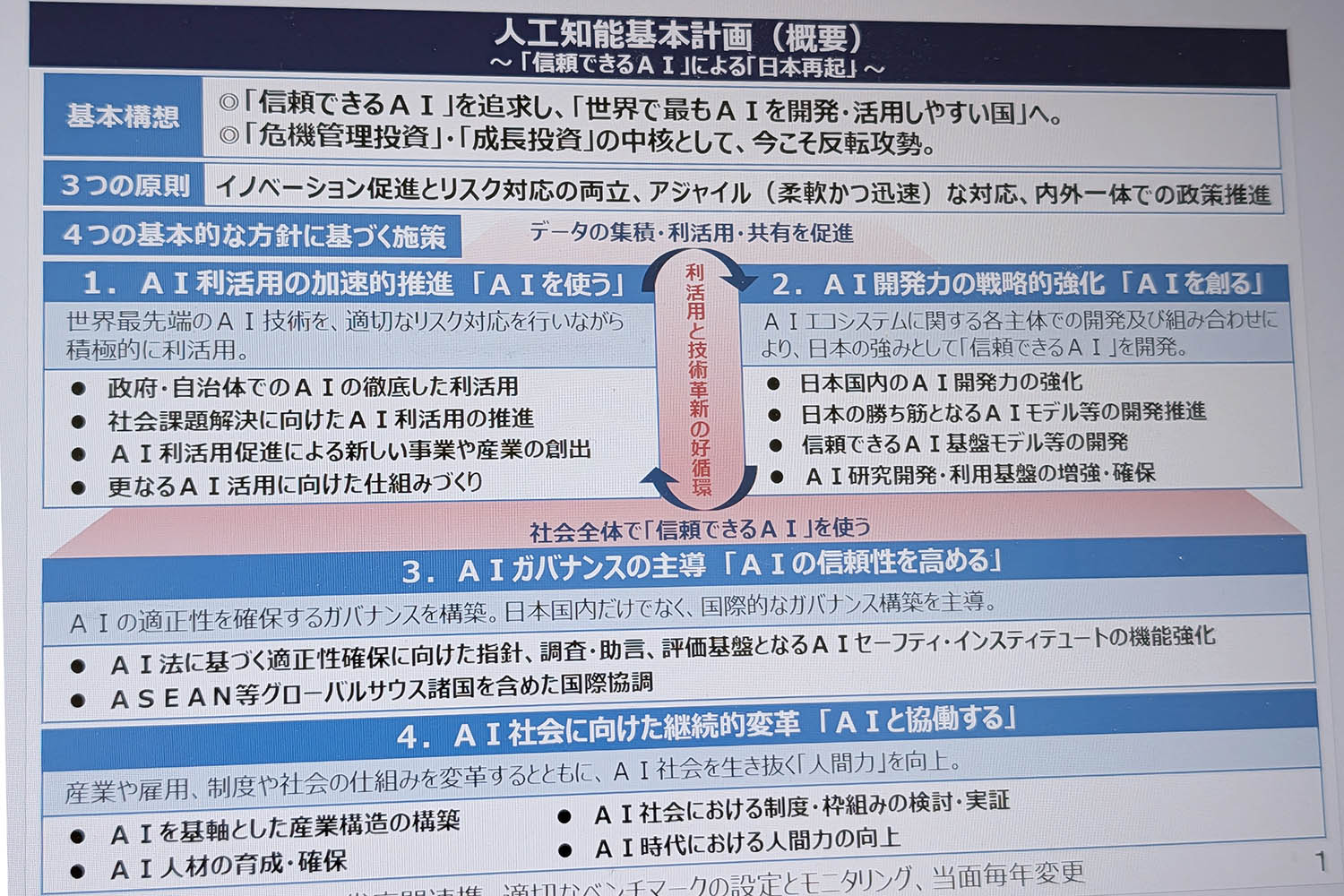

Japan AI Basic Plan aims to be easiest place to build AI

Today