This trend was archived on Jan 14, 2026 as it is no longer seeing new developments.

Global AI Infrastructure Fragmentation Intensifies

Main Take

Cerebras’ IPO reflects growing demand for specialized AI chips amid a competitive landscape dominated by Nvidia. Meanwhile, Nvidia's acquisitions and model releases highlight its strategy to integrate hardware and software for a seamless AI experience. This consolidation points to an escalating arms race where control over AI infrastructure becomes a critical differentiator.

Who Should Care

Cerebras’ IPO could signal a lucrative opportunity in the AI hardware space.

Nvidia's open-source models may accelerate collaborative AI research.

Expect more optimized tools for building and deploying AI solutions.

Related Articles (10)

ByteDance plans $14bn Nvidia chip buy for 2026 AI expansion

Reuters reports that ByteDance plans to spend about 100 billion yuan (~$14.3 billion) on Nvidia AI chips in 2026, up from roughly 85 billion yuan in 2025, if U.S. export rules allow H200 GPU sales to China. Chinese media say the chip budget is part of a broader 160 billion yuan ($23 billion) AI capex plan covering processors and infrastructure for large‑scale model training.([reuters.com](https://www.reuters.com/world/asia-pacific/bytedance-spend-about-14-billion-nvidia-chips-2026-scmp-reports-2025-12-31/?utm_source=openai))

Cerebras plans US IPO to fund next wave of AI chips

Reuters reported on December 19, 2025 that AI chip maker Cerebras Systems was preparing to file for a U.S. IPO as soon as the following week, targeting a second‑quarter 2026 listing after withdrawing an earlier attempt. The company, valued at about $8 billion after raising over $1 billion in 2025, develops wafer‑scale processors for large AI models and competes with Nvidia.([reuters.com](https://www.reuters.com/business/ai-chip-firm-cerebras-set-file-us-ipo-after-delay-sources-say-2025-12-19/))

Blackstone CEO rejects AI data center ‘bubble’ narrative

In an interview reported on December 19, 2025 by UAE daily Al Khaleej, Blackstone CEO Stephen Schwarzman said AI data center investments do not constitute a financial bubble. He argued that Blackstone’s data-center business is built on long-term leases with financially strong clients like NVIDIA.

Nvidia and SK hynix use AI physics to speed chip design

On December 17, 2025, NVIDIA detailed how SK hynix is using its PhysicsNeMo framework to build AI surrogate models that accelerate semiconductor TCAD simulations. The collaboration uses graph neural network–based models to cut process and device simulation times from hours to milliseconds, enabling far faster exploration of chip manufacturing recipes.

Nvidia acquires SchedMD and unveils Nemotron 3 open models

Nvidia has quietly deepened its software and model ambitions by acquiring SchedMD, the company behind the Slurm workload manager, while at the same time rolling out its Nemotron 3 family of open multimodal models for agentic AI. The SchedMD deal keeps Slurm open source and vendor-neutral but effectively brings a critical piece of AI cluster infrastructure under Nvidia’s wing, reinforcing how central its stack has become to training and serving large models at scale. On the model side, Nemotron 3 introduces Nano, Super and Ultra variants using a hybrid mixture-of-experts architecture, with Nvidia claiming up to 4x higher token throughput and 60% lower reasoning token generation costs versus its previous generation, plus a 1M token context window optimized for multi-agent workflows. The company is releasing not just weights but also massive pretraining, post‑training and RL datasets, along with NeMo Gym and NeMo RL libraries, positioning Nemotron as an “open but enterprise-ready” counterweight to both frontier proprietary models and the wave of Chinese open-weight competitors. Strategically, this move cements Nvidia as not just the dominant chip supplier but a major model and tooling provider in the emerging agent ecosystem, while still leaning heavily on openness to keep demand flowing to its hardware.

Synvo AI and SBG partner on secure enterprise AI in Indonesia

Singapore-based Synvo AI, a deep-tech spin-out from NTU’s MMLab, has signed a strategic partnership with Indonesia’s Sobat Bisnis Group (SBG) to commercialize context-aware enterprise AI across Indonesia and Southeast Asia. The alliance centers on Synvo’s Contextual Memory Engine, which promises long-term memory, multimodal context and privacy-preserving deployment across cloud, private cloud and fully offline environments—features designed for regulated industries and data-sensitive workloads. SBG, which has close ties to Indonesia’s Mayapada Group, will integrate Synvo’s stack into solutions for advanced document intelligence, cross-system workflow optimization and hybrid AI systems that preserve visual-language-model context while maintaining task-level accuracy. The partners have formalized their collaboration via an MoU and pitch it as a way to close the gap between cutting-edge multimodal research and real-world enterprise rollouts, with SBG providing distribution and integration muscle on the ground. If they execute, this could become a reference deployment model for regional players that want sophisticated, memory-rich AI agents but need strong guarantees around data sovereignty and on‑premise options.

Nvidia acquires SchedMD to tighten grip on AI data‑center software stack

Nvidia has acquired SchedMD, the company behind the open‑source Slurm workload scheduler used to manage large high‑performance computing and AI clusters. Financial terms were not disclosed, but Nvidia said it will continue distributing Slurm as open source while selling enterprise support, effectively pulling a critical piece of data‑center orchestration into its own portfolio. The deal underscores how much of Nvidia’s AI power comes not just from GPUs but from the surrounding software—CUDA, libraries, and now core scheduling infrastructure—that locks customers into its ecosystem. By owning Slurm, Nvidia can better optimize large training and inference jobs for its hardware, while making it harder for rival chip vendors to compete on equal footing in complex multi‑GPU environments. For AI customers, the upside is potentially smoother scaling and support, but it also concentrates even more leverage in Nvidia’s hands at a time when regulators and hyperscalers are already wary of its dominance.

Nvidia unveils Nemotron 3 open‑source AI models as Chinese competition heats up

Nvidia has launched a new family of open‑source large language models, Nemotron 3, that it says are faster and cheaper to run than its previous offerings while handling longer, multi‑step tasks. The smallest model, Nemotron 3 Nano, is being released immediately with larger versions due in the first half of 2026, signaling Nvidia’s intent to deepen its role not just in AI hardware but in the model ecosystem itself. While Nvidia is best known for supplying chips to closed‑source players like OpenAI, it has been quietly building a catalog of open models that partners such as Palantir are weaving into their own products. The move comes as open‑source models from Chinese labs proliferate, raising competitive pressure on US incumbents and making it harder for Nvidia to simply rely on its hardware moat. Strategically, Nemotron 3 is Nvidia’s bid to stay central to the AI stack even if developers gravitate toward open ecosystems rather than proprietary frontier models.

GIBO partners with Malaysia’s E Total to build NVIDIA‑powered AI compute centers

GIBO Holdings has announced a strategic collaboration with Malaysia‑based E Total Technology to plan and deploy a network of AI compute centers in Malaysia built around NVIDIA’s latest high‑performance GPU architectures. Under the framework, E Total will lead local execution—site selection, technical and commercial feasibility studies, regulatory coordination and data‑center infrastructure planning—while both parties evaluate future capacity expansions. The facilities are expected to support dense AI training and inference workloads for enterprises, research institutions and digital‑economy players, strengthening Malaysia’s aspiration to become a regional AI compute hub. Although financial terms weren’t disclosed, the move signals continued fragmentation of global AI infrastructure build‑out as mid‑tier players and emerging markets race to secure scarce top‑end NVIDIA capacity rather than relying solely on U.S. hyperscalers.([prnewswire.com](https://www.prnewswire.com/news-releases/gibo-announces-strategic-collaboration-with-e-total-technology-sdn-bhd-to-accelerate-deployment-of-ai-compute-centers-featuring-nvidias-most-advanced-chips-302642156.html))

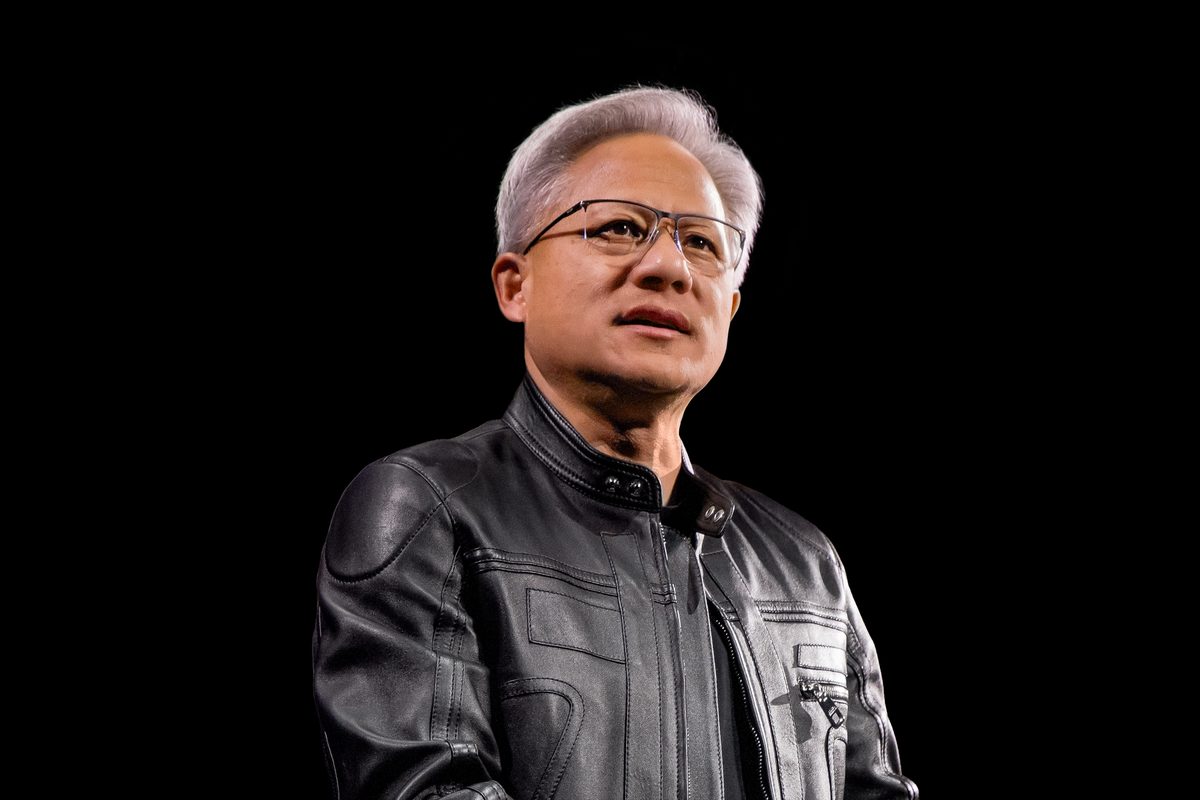

U.S. Senator urges Nvidia CEO to testify over planned approval of AI chip sales to China

U.S. Senator Elizabeth Warren called for Nvidia CEO Jensen Huang and Commerce Secretary Howard Lutnick to testify regarding President Trump’s planned greenlight for sales of Nvidia’s H200 AI chip to China. The request highlights ongoing policy volatility around export controls for advanced AI hardware and the national-security debate over compute access. For Nvidia and the broader semiconductor ecosystem, such political moves can quickly reshape revenue outlooks, customer allocation strategies, and compliance risk. For AI developers and cloud providers, the policy direction influences where frontier training and inference capacity can be deployed—and which regions face structural compute constraints.

Advances AGI Timeline

This trend may accelerate progress toward AGI

Timeline

3 events

Synvo AI partners with SBG for enterprise AI in Indonesia

Partnership aims to commercialize AI solutions in Southeast Asia, marking a notable expansion in the region.

GIBO partners with E Total to build NVIDIA-powered AI compute centers

Strategic collaboration announced to deploy AI compute centers, indicating significant business development.

Nvidia acquires SchedMD to enhance AI data-center software stack

Acquisition of SchedMD strengthens Nvidia's position in AI data-center software, indicating a major strategic move.