This trend is no longer active

This trend was archived on Dec 17, 2025 as it is no longer seeing new developments.

Big Tech's Energy Play Reshapes AI Landscape

Main Take

As major players like Meta, Microsoft, and Apple engage in trading electricity to power AI data centers, a strategic trend emerges where tech companies are diversifying into energy markets to secure their computational needs. This shift indicates a significant evolution in how AI infrastructure is managed, suggesting a future where energy procurement becomes as critical as hardware acquisition, potentially disrupting traditional energy sectors and creating new opportunities for energy innovation.

Related Articles (15)

Cambricon plans to triple AI chip output to fill Nvidia’s gap in China

Beijing-based Cambricon Technologies is preparing to more than triple production of its AI accelerator chips in 2026, targeting around 500,000 units—including up to 300,000 of its latest Siyuan 590 and 690 processors—in an effort to capture market share left open by U.S. sanctions on Nvidia. The company intends to rely largely on Semiconductor Manufacturing International Corporation’s 7‑nanometre ‘N+2’ process, underscoring how China’s domestic chip ecosystem is scaling to support large‑model training despite export controls.

Nvidia’s Jensen Huang meets Trump on AI chip controls and attacks state-level AI rules

Nvidia CEO Jensen Huang met with President Trump and Republican senators on Capitol Hill to discuss export controls on advanced AI chips, saying he supports federal export control policy but warning against state-by-state AI regulation, which he called a national security risk. Coverage in U.S. and Indian outlets highlights Huang’s push for a single federal AI standard that preserves U.S. competitiveness while allowing Nvidia to continue selling powerful AI processors globally, including to China, under controlled conditions.

Mistral launches open‑weight 'frontier AI family' to challenge DeepSeek and US rivals

French startup Mistral released a four‑model "frontier AI family," including a 675‑billion‑parameter sparse Mixture‑of‑Experts model dubbed Mistral Large 3, all under the permissive Apache 2.0 open‑weight license. The models, trained on thousands of Nvidia H200 GPUs, are pitched as state‑of‑the‑art multimodal and multilingual systems that enterprises can run and fine‑tune locally, giving privacy‑sensitive European customers an alternative to Chinese lab DeepSeek and US closed‑weight offerings from OpenAI and Google.

Nvidia says new AI server boosts Chinese mixture‑of‑experts models tenfold

Nvidia released benchmark data showing its latest AI server, which packs 72 of its top chips into a single system, can deliver roughly a 10x performance gain when serving large mixture‑of‑experts models such as Moonshot AI’s Kimi K2 Thinking and DeepSeek’s models. The results aim to show that even as some new models train more efficiently, Nvidia’s high‑end servers remain critical for large‑scale inference, reinforcing its dominance against rivals like AMD and Cerebras in the AI deployment market.

The AI frenzy is driving a memory chip supply crisis

Reuters reports that an acute global shortage of memory chips is emerging as tech giants race to build AI data centers, diverting capacity into high-bandwidth memory for GPUs and away from traditional DRAM and flash used in consumer devices. Major AI players including Microsoft, Google, ByteDance, OpenAI, Amazon, Meta, Alibaba and Tencent are scrambling to secure supply from Samsung, SK Hynix and Micron, with SK Hynix warning the shortfall could last through late 2027, potentially delaying AI infrastructure projects and adding inflationary pressure worldwide.

Vinci emerges from stealth with $46M to accelerate physics‑driven AI chip simulation

Silicon Valley startup Vinci has come out of stealth with a physics‑driven AI platform that it claims can run chip and hardware simulations up to 1,000x faster than traditional finite element analysis tools, without training on customer data. The company disclosed $46 million in total seed and Series A funding led by Xora Innovation and Eclipse, with backing from Khosla Ventures, to expand deployments at leading semiconductor manufacturers.

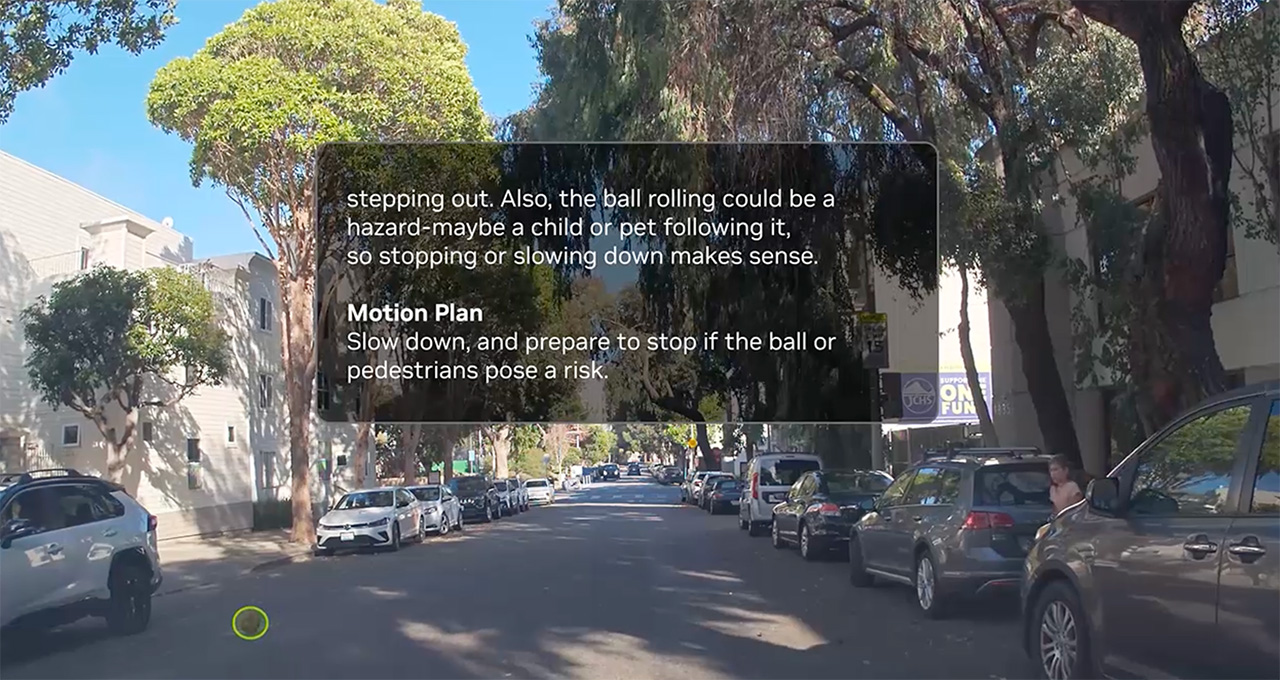

Nvidia releases open Alpamayo-R1 self-driving model and expands open AI toolset at NeurIPS

Nvidia announced Alpamayo-R1, an open, industry-scale vision‑language‑action model for autonomous driving that uses chain‑of‑thought reasoning to explain and plan its driving decisions, along with new open models and datasets for speech and AI safety. The company is releasing Alpamayo-R1, evaluation tools and parts of the training data on GitHub and Hugging Face, positioning its open ecosystem (including Nemotron and Cosmos world foundation models) as a foundation for research and for partners such as AV developers and robotics firms building physical AI systems.

openEuler unveils first SuperPoD-ready OS and adds AMD, Inspur Cloud and Digital China as members

At the openEuler Summit 2025 in Beijing, the openEuler community announced version 24.03 LTS SP3, its first operating system designed to support SuperPoD-scale AI clusters with features such as global resource abstraction, heterogeneous resource fusion and a unified API for high-performance AI workloads. The community also launched an Arm CCA-based confidential computing solution with partners including Arm, Linaro and Baidu Intelligent Cloud, and welcomed AMD, Inspur Cloud and Digital China as new members—meaning all three major CPU vendors (Intel, Arm and AMD) now back openEuler, strengthening China’s open-source infrastructure for AI and cloud computing.

d-Matrix raises $275M Series C to scale AI inference chip platform

Santa Clara–based d-Matrix closed a $275 million Series C round at a $2 billion valuation to expand its full-stack AI inference platform, which combines Corsair accelerators, JetStream networking and Aviator software for large language model serving. The oversubscribed round, led by a global consortium including BullhoundCapital, Triatomic Capital and Temasek with participation from QIA, EDBI and Microsoft’s M12, will fund global deployments and roadmap advances such as 3D memory stacking to deliver up to 10× faster, more energy‑efficient inference than GPU-based systems. ([theaiinsider.tech](https://theaiinsider.tech/2025/11/29/d-matrix-announces-275m-in-funding-to-power-the-age-of-ai-inference/))

Chinese tech giants train AI models overseas to access Nvidia chips amid U.S. curbs

Major Chinese firms including Alibaba and ByteDance are training their latest large language models in Southeast Asian data centers to access Nvidia chips and navigate U.S. export restrictions, according to the Financial Times reporting cited by Reuters. DeepSeek is cited as an exception, training domestically, while Huawei is said to be collaborating on next‑gen Chinese AI chips. ([reuters.com](https://www.reuters.com/world/china/chinas-tech-giants-move-ai-model-training-overseas-tap-nvidia-chips-ft-reports-2025-11-27/))

Meta in talks to spend billions on Google’s AI chips

Meta is negotiating a multi‑year deal to deploy Google’s Tensor Processing Units (TPUs) in its data centers starting in 2027 and may rent TPUs via Google Cloud as early as 2026. If finalized, the move would diversify Meta’s AI hardware beyond Nvidia and bolster Google’s push to commercialize TPUs, reshaping competitive dynamics in AI compute.

Alphabet nears $4T market cap on AI momentum

Alphabet shares climbed toward a $4 trillion valuation, driven by investor confidence in Google’s AI roadmap, including Gemini and its in‑house AI chips. The rally underscores how expectations around AI products and custom silicon are increasingly shaping megacap tech valuations.

Report: Meta in talks to use Google’s AI chips, pressuring Nvidia shares

Alphabet shares rose and Nvidia slipped after reports that Meta is discussing multi‑billion‑dollar purchases of Google’s Tensor Processing Unit (TPU) chips for data centers starting in 2027, with TPU rentals as early as 2026. If finalized, the deal would mark a strategic win for Google’s AI hardware and intensify competition in the AI accelerator market.

Trump weighing approval for Nvidia’s H200 AI chip sales to China

The Trump administration is considering allowing Nvidia to sell its H200 AI accelerators to China, signaling a potential softening of export curbs after a recent trade-tech truce. Any approval would reopen a major market for Nvidia while intensifying debate over U.S. national security and AI leadership.

Meta moves to trade electricity to secure AI data center power

Meta is seeking federal approval to trade wholesale electricity so it can underwrite long-term power from new plants for its AI data centers and resell excess on wholesale markets. The move, which Microsoft is also pursuing and Apple already has approval for, highlights how the AI compute boom is pushing big tech deeper into energy markets.

Discussion

Key Players

Timeline

7 eventsCambricon plans to triple AI chip output

Cambricon's decision to triple production targets a significant market gap left by Nvidia due to U.S. sanctions.

Mistral launches frontier AI family

Mistral's release of a new AI model family is a significant product launch aimed at competing with major rivals.

Nvidia releases new AI server

The launch of Nvidia's new AI server, which significantly boosts performance for mixture-of-experts models, is a major product release.

Vinci raises $46M to accelerate AI chip simulation

Vinci's funding round is significant for its development of a new AI platform, indicating investor confidence.

Nvidia releases Alpamayo-R1 self-driving model

The release of an open self-driving model is a significant advancement in AI technology for autonomous driving.