This trend is no longer active

This trend was archived on Dec 29, 2025 as it is no longer seeing new developments.

AI Governance: The New Frontier of Safety

Main Take

The U.S. government is consolidating AI regulations to avoid a fragmented state-by-state approach. This move aims to enhance competitiveness while ensuring safety standards. South Korea's focus on universal AI literacy reflects a growing recognition that understanding AI is essential for participation in modern society.

Who Should Care

Single regulatory frameworks could streamline compliance costs and accelerate innovation.

A focus on AI safety may lead to new standards in ethical AI development.

Expect shifts in AI deployment practices as regulations evolve.

Related Articles (29)

Militant groups are experimenting with AI, boosting propaganda and cyber risks

An Associated Press report, carried by outlets including The Independent and Halifax’s CityNews, warns that extremist organizations such as the Islamic State group are beginning to use generative AI for recruitment, deepfake propaganda and cyber operations, even if their most ambitious plans remain ‘aspirational’ for now. Analysts say AI image and video tools let small, under‑resourced groups pump out emotionally charged fake content at scale, which can be amplified by social‑media algorithms to radicalize supporters and obscure real‑world atrocities. The story also flags worries that AI could help militants develop biological or chemical weapons by lowering technical barriers, a risk now explicitly mentioned in US homeland security threat assessments. US lawmakers are debating legislation to require annual reviews of AI‑enabled extremist threats and to make it easier for AI companies to share data on abuse patterns, underscoring how AI safety is increasingly merging with counter‑terrorism and cyber‑defense policy debates.

Sina’s AI morning brief spotlights ChatGPT “adult mode”, youth AI usage in Japan and insider‑trading fears around AI prediction markets

Chinese portal Sina’s AI desk published a morning wrap‑up collating overnight global AI stories, ranging from geopolitics to product launches and safety concerns. ([news.sina.com.cn](https://news.sina.com.cn/zx/ds/2025-12-15/doc-inhavser2202625.shtml)) One highlight is OpenAI’s plan to roll out an “adult mode” in ChatGPT in early 2026, with Fidji Simo saying the company is testing an age‑prediction model to automatically detect under‑18 users and gate explicit content—essentially building an AI bouncer into the chatbot. ([news.sina.com.cn](https://news.sina.com.cn/zx/ds/2025-12-15/doc-inhavser2202625.shtml)) The brief also cites a Japanese LINE Yahoo survey showing that 15‑ to 24‑year‑olds most often use AI for casual web queries and homework help, with young women disproportionately turning to chatbots for advice and emotional support, underscoring how quickly AI is becoming a social confidant. ([tech.sina.cn](https://tech.sina.cn/2025-12-14/detail-inhauuyz4808361.d.html)) Other items include Google’s Gemini‑powered real‑time earbud translation pilot in the U.S., Mexico and India, and a spate of “insider trading” allegations on crypto prediction market Polymarket after traders made million‑dollar wins by betting on the exact timing of OpenAI’s GPT‑5.2 launch and Google’s year‑end trends lists—prompting some tech firms to ban employees from such platforms. ([tech.sina.cn](https://tech.sina.cn/2025-12-14/detail-inhauuyz4818034.d.html)) Overall, the brief paints a picture of AI seeping into everyday life while regulators and companies scramble to close gaps in content moderation, youth safety and information‑leak risks.

White House adviser says US will push Congress for a single national AI regulatory framework

The White House said it plans to work with Congress to create a single, nationwide framework to regulate AI, arguing that a patchwork of state rules could slow down deployment and weaken US competitiveness. The comments came from White House adviser Sriram Krishnan in an interview, framing federal action as both pro-innovation and strategically necessary. The deeper subtext is that Washington is trying to stabilize the policy surface area for frontier-model builders and downstream adopters—reducing compliance fragmentation while keeping leverage for national-security guardrails. If this effort turns into legislation (not just executive actions), it could reshape how enterprise AI is rolled out across highly regulated sectors like finance, healthcare, and critical infrastructure.

South Korea’s president calls for nationwide AI literacy, pushing mass education and broader access

South Korea’s president publicly urged that AI literacy be taught as universally as reading and arithmetic, arguing that everyday life will soon require baseline competence in AI tools. In a government briefing, the science/ICT minister noted many citizens still don’t know how to use AI—prompting the president to emphasize rapid, broad-based education and more accessible learning environments. The plan discussed includes expanding digital learning centers and rolling out practical AI education initiatives, starting with students and vulnerable groups. Why it matters: as AI agents and copilots become default interfaces for services, governments that treat AI literacy as infrastructure (not just workforce training) could accelerate domestic adoption—raising competitive pressure on consumer AI platforms, local model providers, and public-sector AI procurement across the region.

Trump signs executive order aimed at curbing state AI laws in favor of a national standard

President Donald Trump signed an executive order intended to push back on what the administration calls the most “onerous” state-level AI regulations, arguing that a patchwork of rules across 50 states could slow innovation and investment. The order sets up a path for federal action—via legal challenges and agency reviews—to preempt or contest state measures, while claiming it will not oppose child-safety-related rules. This is a major governance pivot for the U.S. AI ecosystem: it shifts the battlefield from state legislatures toward federal agencies, courts, and ultimately Congress, raising the stakes for national standards on transparency, risk mitigation, and model accountability. For AI companies, a single federal regime could reduce compliance fragmentation—but it could also harden into a high-impact procurement and enforcement framework if the federal government becomes more prescriptive. The strategic subtext is international: the administration explicitly frames regulatory speed as part of competing with China, making AI policy a competitiveness tool rather than purely a consumer-protection tool.

AI is transforming childhood in classrooms and at home, The Economist warns

A feature syndicated from The Economist argues that artificial intelligence is rapidly reshaping childhood by becoming embedded in education, games and digital companions. While AI tutors and adaptive learning tools from platforms such as Khan Academy, Google, OpenAI and Microsoft can personalize instruction, researchers warn that over-reliance may undermine critical thinking, blur academic integrity and alter children’s emotional development and relationships.

![[Photo] Legislative public hearing on the proposed artificial intelligence (AI) law](https://image.newdaily.co.kr/site/data/img/2025/12/09/2025120900199_0.jpg)

South Korean parliament holds public hearing on proposed AI Basic Law

South Korea’s National Assembly Science, ICT, Broadcasting and Communications Committee held a public hearing on a draft ‘AI Basic Law’ in Seoul, signaling momentum toward a comprehensive legal framework for artificial intelligence. Lawmakers and experts discussed how to balance innovation with safeguards around data use, accountability and the social impact of AI systems.

Trump to issue order creating national AI rule

U.S. President Donald Trump said he will sign an executive order this week to create a single federal "One Rule" framework for artificial intelligence, aiming to preempt a growing patchwork of state-level AI regulations. The move is backed by major tech and AI firms that argue divergent state rules would stifle innovation, but is drawing bipartisan concern from states and lawmakers who see it as an overreach that weakens local protections and oversight.

Top scientists converge in Guangxi to boost AI-driven high-quality development

A Xinhua report describes a recent high-level talent event in China’s Guangxi region where 28 academicians and national experts met to promote the integration of artificial intelligence into local industries. The initiative aims to inject new momentum into Guangxi’s AI and related sectors by fostering joint projects, talent pipelines and application pilots aligned with China’s broader digital and AI development strategy.

Japan, US, Australia, Canada and UK plan closed-door talks in Tokyo on AI and 6G to counter China

Chinese outlet Guancha, citing Nikkei Asia, reports that Japan, the US, Australia, Canada and the UK will hold a closed-door meeting in Tokyo to coordinate on artificial intelligence and 6G telecommunications standards, explicitly framed as an effort to counter China’s influence in global telecoms. The talks are expected to cover AI use in Open RAN and broader telecom infrastructure, underscoring how AI and next‑generation networks are becoming central to geopolitical standard‑setting and techno‑industrial blocs.

UK China Affairs Committee delegation visits Tsinghua’s Institute for AI International Governance

Beijing Academy of Artificial Intelligence’s community hub reports that a delegation from the UK National Committee on China Affairs visited Tsinghua University’s Institute for AI International Governance to discuss topics including AI governance, frontier model risk and global cooperation mechanisms. The two sides compared national AI strategies and regulatory approaches and explored deeper collaboration on responsible AI, reflecting ongoing cross-border dialogue on AI norms despite broader geopolitical tensions.

Chinese cybersecurity symposium spotlights generative AI safety and data‑driven policing

The Fourth Cybersecurity Research and Development Symposium, held at Jiangxi Police College, focused heavily on generative AI safety, protection of critical information infrastructure and training specialized data‑policing talent. Speakers from police, academia and research institutes described how generative AI can both strengthen capabilities—such as encrypted traffic detection, malware analysis, vulnerability management and threat intelligence—and introduce new risks that require integrated 'professional + mechanism + big data' approaches, underscoring China’s effort to embed AI governance and security into law‑enforcement practice.

Tengchong Scientists Forum AI sub‑forum focuses on 'AGI’s next paradigm' and AI4S initiatives

At the 2025 Tengchong Scientists Forum in Yunnan, China, an AI sub‑forum themed “AGI’s Next Paradigm” brought together scientists, academics and industry leaders to discuss breakthroughs in general intelligence, AI for science and industrial applications. Hosted by China Mobile’s Yunnan subsidiary, the event launched the Jiutian 'Renewing Communities' youth AI scientist support program and the AI4S 'Model Open Space' cooperation plan, which will build the 'Tiangong Zhiyan' scientific AI workstation and unveil new AI applications including a mental‑health agent and dual‑intelligent city projects, signaling China’s push to link frontier AGI research with large‑scale compute and real‑world deployments.

Latin American legislative leaders agree common principles for AI regulation in justice and security

Meeting in Puerto Rico under the FOPREL forum, presidents and senior representatives of legislative bodies from Central America, Mexico and the Caribbean agreed on basic parameters for regional laws governing the use of AI algorithms in justice and security. The accord calls for AI deployments to align with human rights, local laws and democratic protections, and to facilitate secure cross-border data sharing while preserving institutional integrity.

Experts at Doha Forum urge new global rules to govern AI in warfare

At the Doha Forum 2025, a panel of international experts warned that rapidly advancing military uses of AI—especially in nuclear command and autonomous weapons—require urgent global rules to ensure accountability and human control. Speakers argued that AI is a “civilizational technology” akin to electricity and called for governance frameworks like the REAIM commission’s “Responsible by Design” report to keep military AI aligned with human rights and international law. ([koreatimes.co.kr](https://www.koreatimes.co.kr/amp/foreignaffairs/others/20251206/ai-risks-in-warfare-demand-new-global-rules))

China opens 2025 Tengchong Scientists Forum on ‘Science and AI Changing the World’

The 2025 Tengchong Scientists Forum opened in Yunnan with the theme “Science · AI Changing the World”, gathering hundreds of leading scientists, university heads and entrepreneurs to discuss AI’s role in reshaping research and industry. At the event, China released its first systematic "Technology Foresight and Future Vision 2049" report, which names artificial intelligence and general-purpose robots among ten key technology visions for a 2049 smart society in which humans and machines coexist.

China blue book urges clear boundaries for AI in cross-border law enforcement

China’s new "Foreign-related Rule-of-Law Blue Book (2025)" warns that accelerated use of AI in global law enforcement is outpacing legal frameworks and calls for clear rules on how AI can be used in cross‑border policing. The report highlights risks around privacy, national security, algorithmic opacity and cross‑border data flows, and recommends a dedicated regulation on AI in law enforcement, judicial interpretations on AI-generated evidence, and development of interoperable cross-border AI enforcement standards.

Japan drafts basic AI program aiming to raise public usage to 80%

Japan’s government has prepared a draft basic program on AI development and use that targets raising the public AI utilization rate first to 50% and eventually to 80%. The plan also seeks to attract about ¥1 trillion in private-sector investment for AI R&D, positioning AI as core social infrastructure and aiming to close the adoption gap with the US and China.

China’s civil aviation regulator issues ‘AI + Civil Aviation’ plan to deeply integrate AI across the sector by 2030

China’s Civil Aviation Administration has released an Implementation Opinion on promoting high-quality development of "AI + civil aviation," setting targets to make AI integral to aviation safety, operations, passenger services, logistics, regulation and infrastructure planning by 2027, and to achieve broad, deep AI integration with a mature governance and safety system by 2030. The document identifies 42 priority application scenarios—ranging from risk early-warning and intelligent scheduling to smarter logistics and regulatory decision-making—and calls for stronger data, infrastructure platforms and domain-specific models to support the transformation.([ce.cn](https://www.ce.cn/cysc/newmain/yc/jsxw/202512/t20251206_2625091.shtml?utm_source=openai))

Agents-as-a-service poised to reshape enterprise software and corporate structures

A CIO feature argues that autonomous AI agents delivered as “agents-as-a-service” are rapidly emerging on top of traditional SaaS, with more than half of surveyed executives already experimenting with AI agents for customer service, marketing, cybersecurity and software development. Drawing on forecasts from Gartner and IDC, it predicts that by 2026 a large share of enterprise applications will embed agentic AI, shifting user interaction away from individual apps toward cross‑app AI orchestrators and forcing CIOs to rethink pricing, integration and security models. ([cio.com](https://www.cio.com/article/4098664/agents-as-a-service-are-poised-to-rewire-the-software-industry-and-corporate-structures.html))

People’s Daily urges 'long-termist spirit' in China’s AI development

An opinion piece in China’s People’s Daily argues that developing artificial intelligence requires a “long-termist spirit,” urging Chinese entrepreneurs to focus on foundational research, talent cultivation, and resilient industrial chains rather than short-term hype. The article frames AI as a strategic technology for national rejuvenation and calls for coordinated efforts across government, academia and industry to build sustainable advantages.

CBC News tightens AI usage rules, mandating human oversight and transparency for automated content

CBC News has updated its internal guidelines on the use of AI, emphasizing that artificial intelligence is a tool, not the creator, of published content. The policy allows AI for assistive tasks like data analysis, drafting suggestions and accessibility services, but bans AI from writing full articles or creating public-facing images or videos, and requires explicit disclosure to audiences when AI plays a significant role in a story.

Study finds major AI companies' safety practices fall far short of global standards

A new study reported by Reuters concludes that safety practices at major AI firms including Anthropic, OpenAI, xAI and Meta fall "far short" of international best practices, particularly around independent oversight, red-teaming and incident disclosure. The report warns that even companies perceived as safety leaders are not meeting benchmarks set by global governance frameworks, adding pressure on regulators to move from voluntary commitments to enforceable rules.

Study finds major AI companies’ safety practices fall short of emerging global standards

A new edition of the Future of Life Institute’s AI Safety Index concludes that leading AI developers including Anthropic, OpenAI, xAI and Meta lack robust strategies to control potential superintelligent systems, leaving their safety practices "far short" of emerging international norms. The report, based on an independent expert panel, comes amid growing concern over AI‑linked self‑harm cases and AI‑driven hacking, and has prompted renewed calls from researchers such as Max Tegmark, Geoffrey Hinton and Yoshua Bengio for binding safety standards and even temporary bans on developing superintelligence until better safeguards exist.

Anthropic’s Jared Kaplan warns humanity must decide on AI autonomy by 2030

Anthropic chief scientist Jared Kaplan told The Guardian, in comments reported by Indian media, that humanity faces a critical choice by around 2030 on whether to allow AI systems to train and improve themselves autonomously, potentially triggering an "intelligence explosion" or a loss of human control. Kaplan also predicted that many white‑collar jobs and even school‑level cognitive tasks could be overtaken by AI within two to three years, urging governments and society to confront the trade‑offs of super‑powerful AI while there is still time to set governance boundaries.

AWS unveils frontier AI agents that act as autonomous software team-mates

Amazon Web Services introduced a new class of "frontier agents"—Kiro autonomous agent, AWS Security Agent and AWS DevOps Agent—designed to work as autonomous teammates that can run for hours or days handling coding, security and operations tasks with minimal human oversight. The agents integrate with common developer and ops tools (GitHub, Jira, Slack, CloudWatch, Datadog, etc.) and are pitched as a step-change from task-level copilots toward fully agentic systems embedded across the software lifecycle. ([aboutamazon.com](https://www.aboutamazon.com/news/aws/amazon-ai-frontier-agents-autonomous-kiro))

World Economic Forum outlines governance blueprint for enterprise AI agents

The World Economic Forum has published guidance on how organisations should classify, evaluate, and govern AI agents as they move from prototypes to autonomous collaborators in business and public services. The framework emphasises agent "resumes", contextual evaluation beyond standard ML benchmarks, risk assessment tied to autonomy and authority levels, and progressive governance that scales oversight with agent capability.

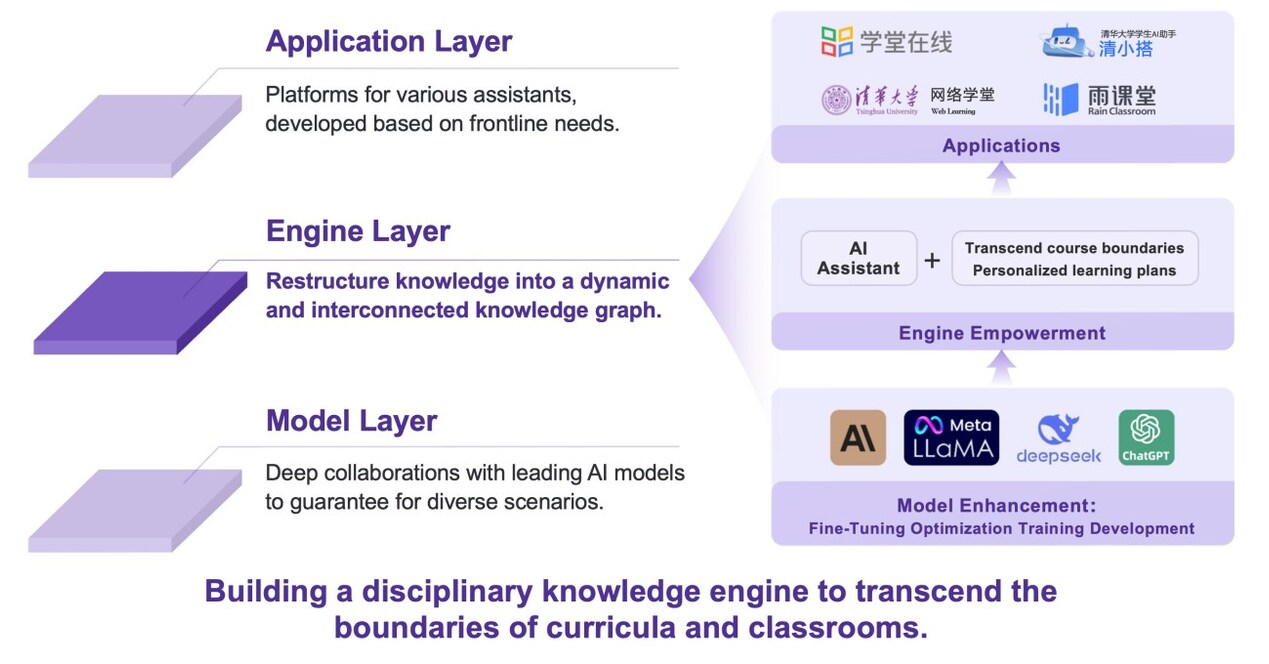

Tsinghua University issues first campus‑wide framework for using AI in teaching and research

Tsinghua University has released guiding principles that set detailed rules for how students and faculty may use artificial intelligence in education and academic work, described as the institution's first comprehensive, university-wide AI governance framework. The guidelines emphasise AI as an auxiliary tool, mandate disclosure of AI use, ban ghost‑writing and plagiarism with AI, and address data security, bias and the digital divide as AI becomes embedded in classrooms and labs.

DeepSeek and Alibaba researchers back China’s AI regulatory approach in new Science paper

Researchers from DeepSeek and Alibaba co-authored a paper arguing China’s emerging AI governance framework is innovation-friendly but would benefit from a national AI law and clearer feedback mechanisms. The endorsement highlights Beijing’s push to position its model as pragmatic and open-source friendly as Chinese AI systems gain global traction.

Discussion

Neutral Impact

This trend has minimal direct impact on the AGI timeline.

Timeline

7 events

Trump signs executive order for national AI regulations

This executive order aims to create a single federal framework for AI regulation, impacting innovation and investment.

White House plans national AI regulatory framework

The announcement indicates a significant shift towards a unified approach to AI regulation in the US.

Trump to issue order creating national AI rule

The upcoming executive order is aimed at establishing a federal standard for AI, addressing state-level regulatory discrepancies.

Latin American leaders agree on AI regulation principles

This agreement establishes foundational guidelines for AI use in justice and security across several countries.

Experts call for global AI rules in warfare at Doha Forum

The call for new global rules addresses urgent concerns regarding AI's military applications, which could shape future regulations.