TCL and Microsoft unveil AI-powered smart terminals at CES 2026

TL;DR

On January 8, 2026, TCL announced at CES in Las Vegas that it is rolling out a portfolio of AI-powered smart terminals—TVs, phones, tablets, wearables and home appliances—built on Microsoft Azure Speech and Azure OpenAI “Foundry Models”. The companies plan phased regional launches of Copilot-enabled, multimodal features like real-time translation, personalized recommendations and cross-device agents throughout 2026.

About this summary

This article aggregates reporting from two news sources. The TL;DR is AI-generated from the original reporting. Race to AGI's analysis provides editorial context on the implications for AGI development.

Race to AGI Analysis

This TCL–Microsoft tie‑up is a concrete example of how foundation models are seeping into everyday hardware at scale. By wiring Azure OpenAI and Copilot into TVs, phones and home appliances, TCL is effectively turning its installed base into a massive real‑world testbed for multimodal agents that live in the OS layer rather than inside individual apps. That has significant implications for how user data is collected, how personalization is delivered, and how quickly conversational interfaces could become the default way people interact with their devices.

Strategically, Microsoft gains another high‑volume OEM partner to extend its AI stack beyond PCs and the cloud, shoring up its position against Google’s Gemini‑powered Android ecosystem and Samsung’s Galaxy AI ambitions. For Chinese OEMs like TCL, relying on U.S. cloud AI rather than solely on domestic models is a way to differentiate in global markets while hedging regulatory risk. The more homes fill with devices that can listen, see and act across contexts, the closer we move toward ambient AI: an environment in which an eventual AGI could be continuously engaged with people’s workflows rather than confined to a chat window.

Who Should Care

Companies Mentioned

Related News

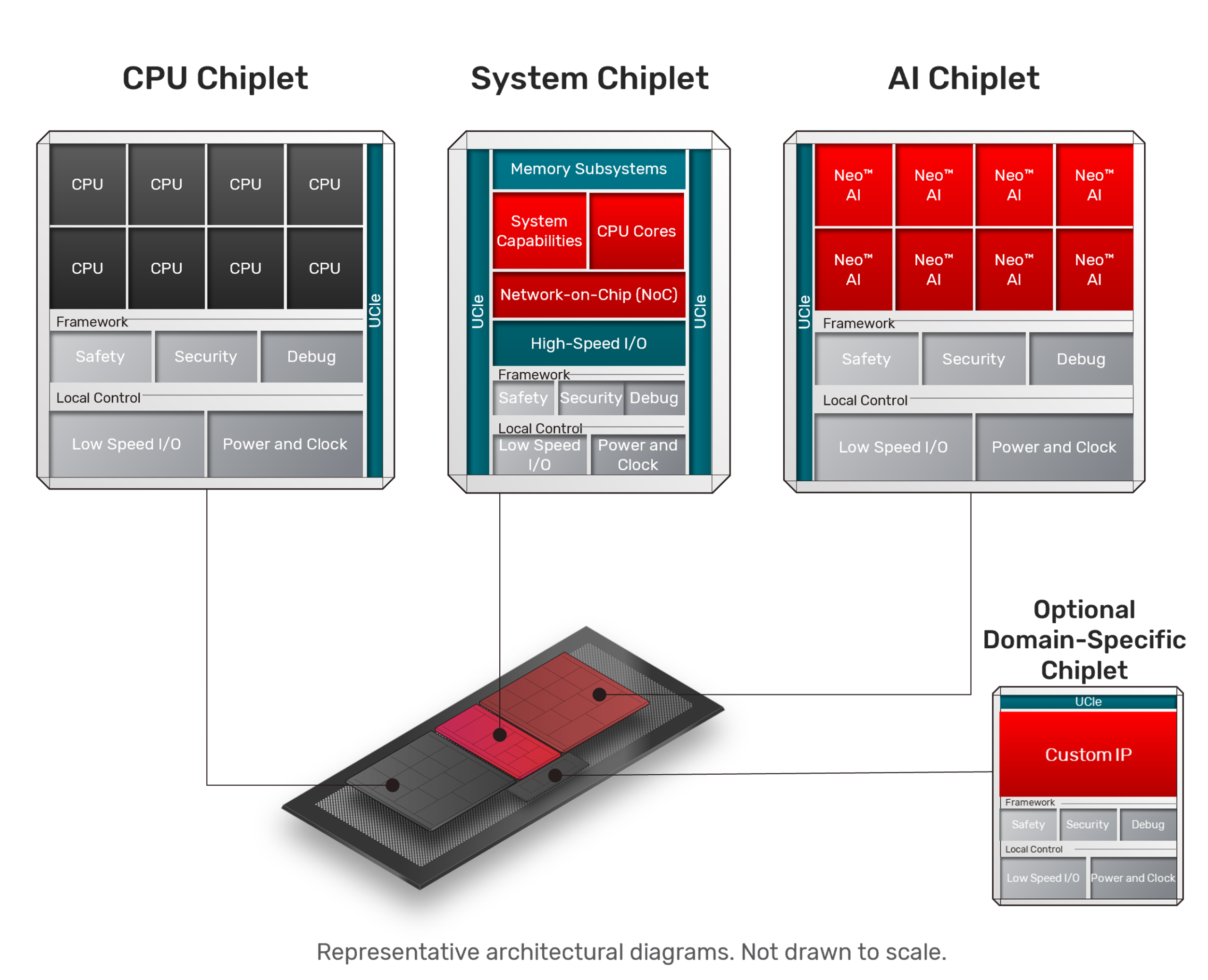

Cadence touts pre‑validated AI chiplet platform to speed custom silicon

TodayCyberPress flags 91,000 attacks probing AI deployments worldwide

Today

Yuwell showcases AI health ring and wearables at CES 2026 debut

Today