AI-fueled DRAM shortage hits CES 2026 smart devices

TL;DR

An article from Mathrubhumi’s tech desk on January 9, 2026, reports that CES exhibitors are grappling with an acute shortage of DRAM and storage chips as AI features spread from data centers into consumer devices. Industry executives and IDC analysts warn that the AI-driven memory crunch is raising component prices and forcing OEMs to cut features or redesign products, and that it could extend into next year.

About this summary

This article aggregates reporting from 2 news sources. The TL;DR is AI-generated from original reporting. Race to AGI's analysis provides editorial context on implications for AGI development.

Race to AGI Analysis

The CES floor now has a weird split personality: glossy AI‑powered gadgets out front, and behind the scenes, vendors quietly panicking over DRAM allocation. The Mathrubhumi piece makes clear that AI’s appetite for memory has spilled out of data centers and into everything from laptops to smart rings, putting sustained upward pressure on DRAM prices and forcing trade‑offs between capability, cost and battery life. In effect, we’re seeing a replay of the GPU crunch, but for a component that touches virtually every computing device.

For the race to AGI, this matters because memory constraints can become a real bottleneck on deploying large models at the edge and in low‑margin devices. If DRAM stays scarce and expensive, OEMs may favor smaller, distilled models and more efficient architectures, accelerating research into sparsity, quantization and retrieval‑augmented setups. It also underscores how fragile the hardware stack is: a big model breakthrough is meaningless if you can’t afford to ship enough RAM in the products that users actually touch. Expect more vertical integration and long‑term supply deals between AI labs, chipmakers, and device brands as everyone tries to secure their slice of the memory pool.

Related News

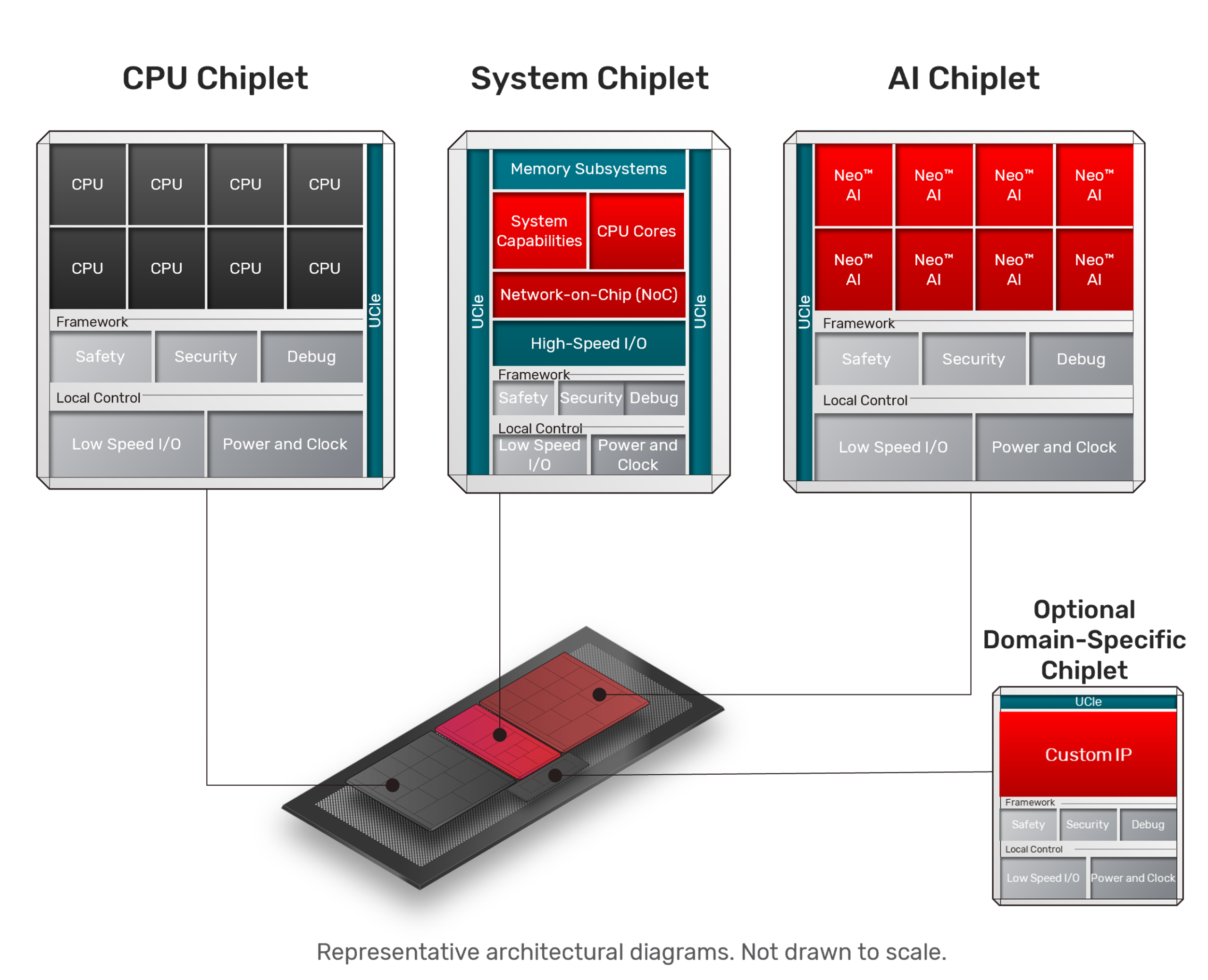

Cadence touts pre‑validated AI chiplet platform to speed custom silicon

TodayCyberPress flags 91,000 attacks probing AI deployments worldwide

Today

Yuwell showcases AI health ring and wearables at CES 2026 debut

Today