LeCun leaves Meta to launch Advanced Machine Intelligence Labs

TL;DR

Meta’s longtime chief AI scientist Yann LeCun is leaving the company to co‑found Advanced Machine Intelligence Labs, a new startup pursuing alternatives to large language models. In a January 3, 2026 interview, he alleged Meta’s Llama 4 benchmarks were “fudged” and criticized the company’s LLM‑centric strategy while detailing his new world‑model‑based research agenda.

About this summary

This article aggregates reporting from two news sources. The TL;DR is AI-generated from the original reporting. Race to AGI's analysis provides editorial context on the implications for AGI development.

Race to AGI Analysis

LeCun’s exit from Meta formalizes a split many in the field have sensed for years: between those betting everything on ever‑larger language models and those who think LLMs are a dead end on the path to superintelligence. At Meta he could argue for alternative architectures like V‑JEPA, but he couldn’t control the product roadmap; at AMI Labs, he gets a clean slate to build world‑model‑centric systems that learn from video and interaction rather than just text ([the-decoder.com](https://the-decoder.com/you-certainly-dont-tell-a-researcher-like-me-what-to-do-says-lecun-as-he-exits-meta-for-his-own-startup/)).

Strategically, this weakens Meta at an awkward moment. The company is already under pressure after Llama 4 under‑delivered relative to OpenAI and Google, and LeCun’s public claim that benchmarks were “fudged” undermines confidence in how it evaluates its models. At the same time, his new lab adds another well‑funded, theory‑driven competitor to the frontier research landscape, one that may attract disillusioned researchers from Big Tech who still want to chase AGI but with a different paradigm. If AMI Labs can turn world‑model rhetoric into working agents that outperform scaled‑up LLM systems on planning and grounded reasoning, that could force the rest of the industry to broaden its notion of what counts as “state of the art.”

Related News

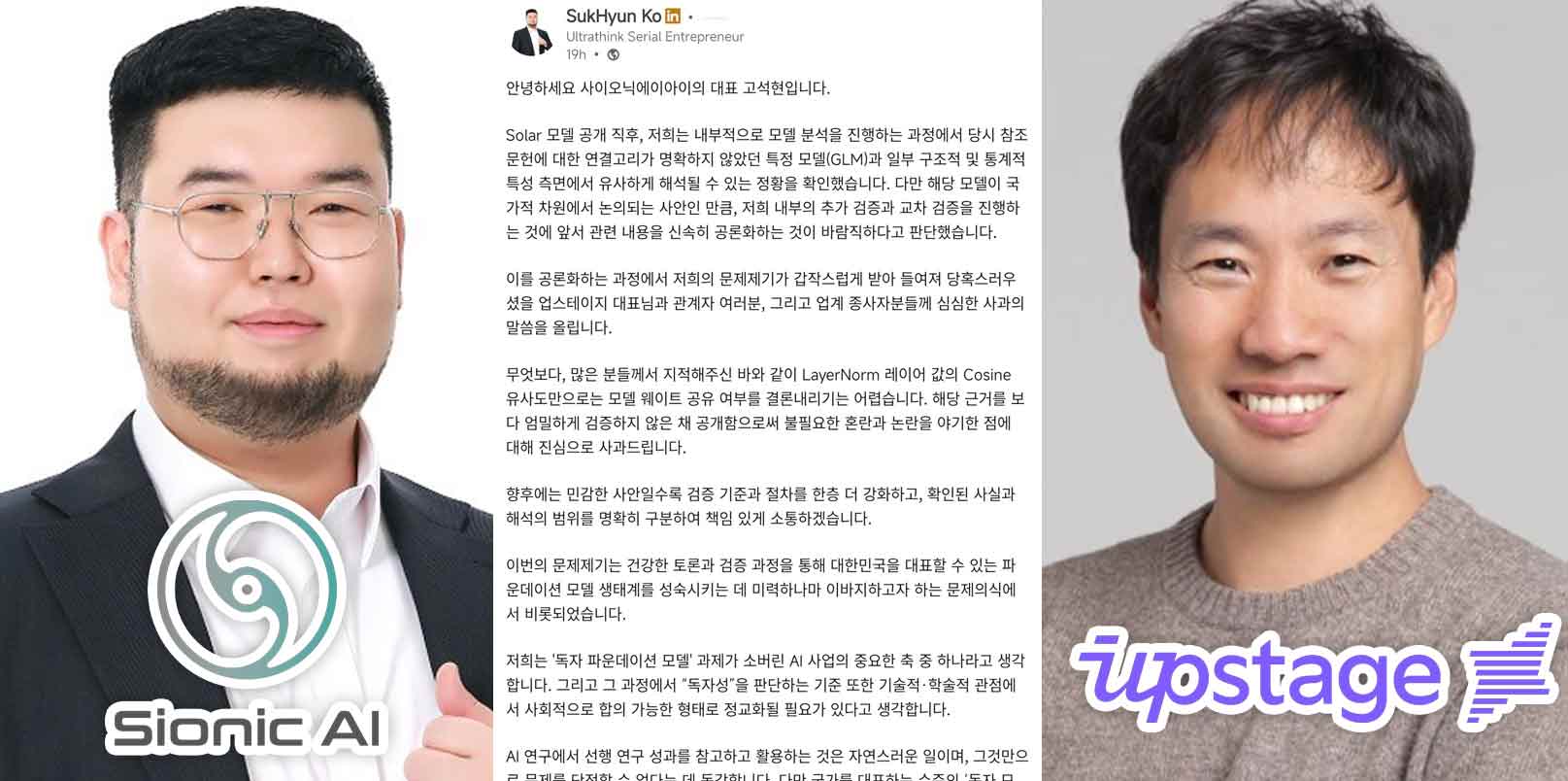

Sionic AI CEO apologises for unproven plagiarism claim against Upstage model

Today

Nvidia–Groq and Meta–Manus deals herald 2026 AI consolidation wave

Today

Taiwan AI supply chain powers manufacturing PMI to 18‑month high

Today