Anthropic Claude Opus 4.5 hits 4h49m METR long-horizon mark

TL;DR

The AI evaluation group METR reports that Anthropic’s Claude Opus 4.5 achieves a 50% “time horizon” of roughly 4 hours 49 minutes on long coding tasks, the highest score it has published so far. At an 80% success threshold, the time horizon drops to around 27 minutes, highlighting continuing reliability gaps on very long tasks.

About this summary

This article aggregates reporting from 3 news sources. The TL;DR is AI-generated from original reporting. Race to AGI's analysis provides editorial context on implications for AGI development.

Race to AGI Analysis

METR’s new result for Claude Opus 4.5 is one of the clearest quantitative signals yet that long-horizon agent performance is climbing fast. A 50% time horizon of 4 hours 49 minutes means that, on METR’s coding tasks, Opus succeeds about half the time on work estimated to take a human nearly five hours. That is more than double the horizon of earlier Claude variants and ahead of prior leaders on the same benchmark. Commentators analyzing METR’s updated curves argue that effective horizons may now be doubling every few months rather than every half-year, implying “workday-length” autonomy sometime in 2026 if current trends hold. ([the-decoder.com](https://the-decoder.com/anthropics-claude-opus-4-5-can-tackle-some-tasks-lasting-nearly-five-hours/))
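To make the “workday-length” extrapolation concrete, here is a back-of-the-envelope sketch in Python. It assumes the horizon keeps growing exponentially at a fixed doubling time, treats a workday as 8 hours, and tries two hypothetical doubling times (4 and 7 months) that are illustrative choices rather than figures from METR. The helper name `months_until` is ours.

```python
import math


def months_until(target_minutes, current_minutes, doubling_months):
    """Months needed for the horizon to grow from current to target,
    assuming steady exponential growth with a fixed doubling time."""
    doublings = math.log2(target_minutes / current_minutes)
    return doublings * doubling_months


current = 4 * 60 + 49  # 289 minutes: the reported 50% time horizon
workday = 8 * 60       # assumption: a "workday" means 8 hours

# Hypothetical doubling times in months (illustrative, not METR figures).
for doubling in (4, 7):
    months = months_until(workday, current, doubling)
    print(f"doubling every {doubling} months -> {months:.1f} months to an 8-hour horizon")
```

Going from 289 to 480 minutes takes a bit under three-quarters of one doubling, so either assumed pace gets there within about half a year. Note that this is the 50% horizon: the same arithmetic says nothing about when success on workday-length tasks becomes dependable.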

For the race to AGI, this is less about one model “being AGI” and more about the shape of the curve. If agents can reliably tackle multi-hour projects with only light supervision, you start to see real substitution in software engineering, data work, and ops, along with a lot more experimentation with stacked, self-verifying agent systems. The catch is reliability: at an 80% success threshold, Opus 4.5’s horizon collapses to ~27 minutes, roughly on par with some OpenAI models, underscoring how brittle very long runs remain. That gap between “can sometimes do it” and “almost always does it” is exactly where safety, verification, and orchestration research will determine whether these systems become dependable teammates or just spectacular but flaky tools. ([ai-primer.com](https://ai-primer.com/en/engineer/reports/2025-12-20?utm_source=openai))
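One rough way to picture the gap between the 50% and 80% horizons is to assume that success probability follows a logistic curve in the log of task length, which is our understanding of how METR derives its horizons. The sketch below is not METR’s fitted curve: it simply pins an assumed logistic to the two reported points (289 minutes at 50%, about 27 minutes at 80%) and reads off what that would imply for other task lengths. The names `SLOPE` and `success_probability` are ours.

```python
import math

H50 = 289.0  # minutes: reported 50% time horizon (4h49m)
H80 = 27.0   # minutes: reported 80% time horizon (approximate)


def logit(p):
    """Log-odds of a probability."""
    return math.log(p / (1 - p))


# Slope per doubling of task length implied by the two reported points,
# under the ASSUMED logistic form (an illustration, not METR's fit).
SLOPE = logit(0.8) / math.log2(H50 / H80)


def success_probability(task_minutes):
    """Assumed success probability for a task of the given human-estimated length."""
    x = SLOPE * math.log2(H50 / task_minutes)
    return 1 / (1 + math.exp(-x))


for minutes in (27, 60, 289, 480):
    print(f"{minutes:>4} min task -> {success_probability(minutes):.2f}")
```

Under these assumptions the curve is shallow: cutting the task length by a factor of more than ten only moves the success rate from 50% to 80%, which is another way of stating the reliability gap described above.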

Related Deals

DOE signed nonbinding MOUs with 24 AI and compute organizations to apply advanced AI and high-performance computing to Genesis Mission scientific and energy projects.

OpenAI, Anthropic, Block and major cloud providers are co-founding the Agentic AI Foundation under the Linux Foundation to steward open, interoperable standards for AI agents.

Founding members created the Agentic AI Foundation under the Linux Foundation to fund and govern open standards like MCP, goose and AGENTS.md for interoperable agentic AI.

Accenture and Anthropic formed a new business group and training program around Claude to bring production-grade AI services to tens of thousands of Accenture staff and clients.

Accenture and Anthropic entered a multi‑year strategic partnership to co‑invest in a dedicated business group, training 30,000 Accenture staff on Claude and co‑developing AI solutions for regulated industries.

Related News

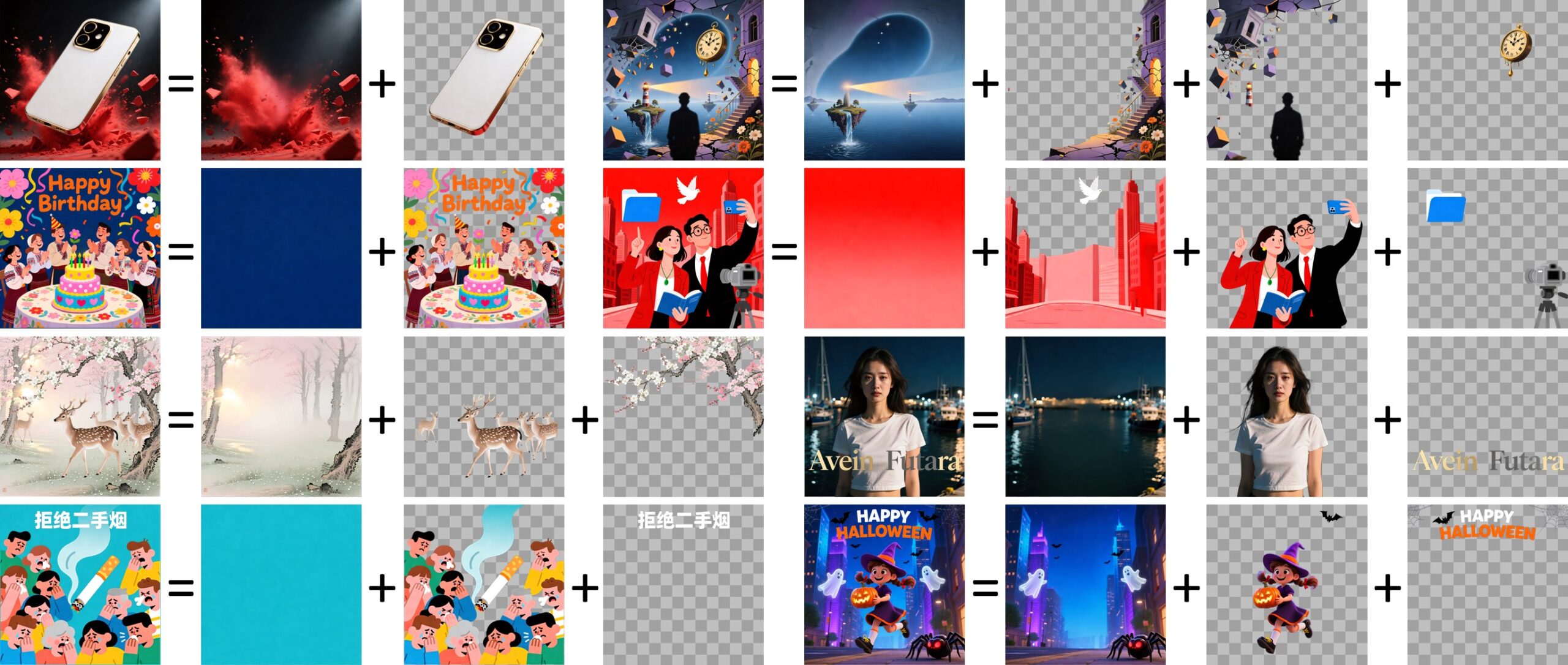

Alibaba Qwen unveils Qwen-Image-Layered for editable AI images

Today

Yunus Emre Institute plans AI platform to scale Turkish language learning

Today

UCLA AI tool flags undiagnosed Alzheimer’s with 80% sensitivity

Today