OpenAI audio model bets on real-time voice and Jony Ive hardware

TL;DR

On January 5, 2026, multiple tech outlets reported that OpenAI is preparing a new audio-model architecture for release by the end of Q1 2026, capable of speaking while users talk and handling natural interruptions. The model is reportedly tied to an audio-first personal device designed by Jony Ive’s team and targeted for 2026–2027.

About this summary

This article aggregates reporting from four news sources. The TL;DR is AI-generated from the original reporting. Race to AGI's analysis provides editorial context on the implications for AGI development.

Race to AGI Analysis

If these reports are accurate, OpenAI is making its first big architectural bet since GPT‑5 by targeting end‑to‑end audio as a primary interface, not an add‑on. A model that can listen and speak simultaneously with sub‑second latency, while running on a dedicated hardware device, would move AI closer to feeling like a human conversational partner rather than a turn‑based chatbot.([byteiota.com](https://byteiota.com/openai-audio-model-q1-2026-post-screen-ai-or-hype/?utm_source=openai))

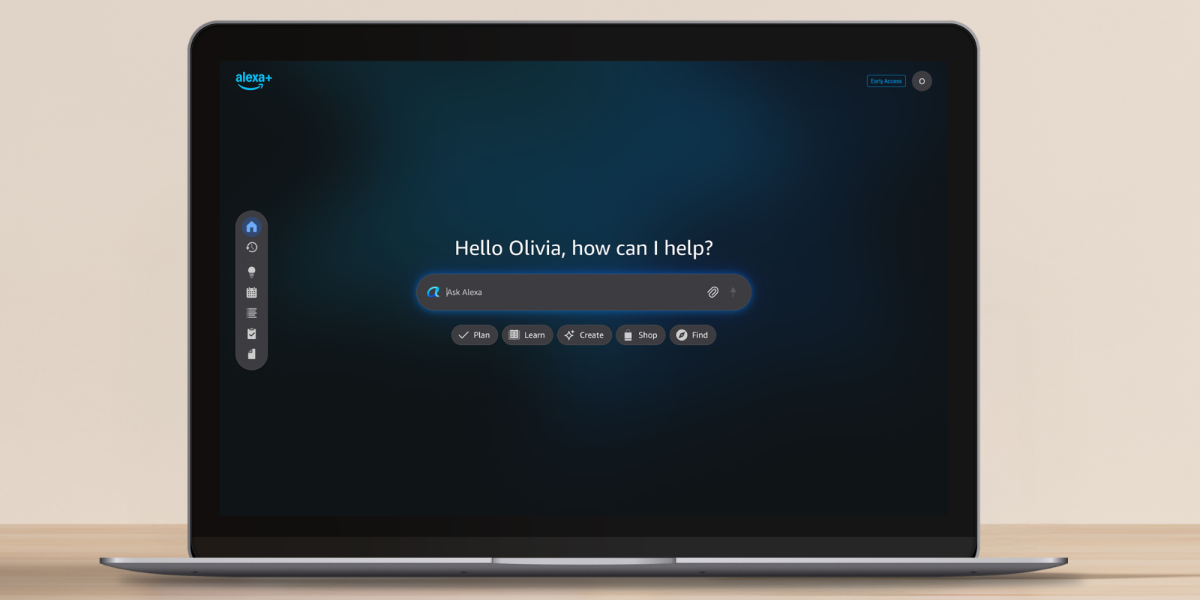

Strategically, this is a play to own the post‑screen interface layer. A Jony Ive–designed, audio‑first companion tied deeply into OpenAI’s stack could become the “iPhone moment” for ambient AI—especially if it ships before rivals like Meta, Google, or Tesla nail comparable voice-native form factors. That would give OpenAI direct distribution into consumers’ daily lives instead of relying solely on APIs and partner apps.([voice.lapaas.com](https://voice.lapaas.com/openai-first-ai-device-pen-like/?utm_source=openai))

From an AGI perspective, shifting serious talent and compute toward audio raises the ceiling on how much cognitive work can be automated in real time: customer support, coding assistance, research workflows, even creative collaboration. It also introduces new safety frontiers—continuous sensing, always‑on microphones, and persuasive, emotionally aware speech—that are harder to monitor than text. The absence, so far, of a matching public safety roadmap around this audio push is notable.
