Domain-aware quantum circuit boosts ML on NISQ hardware

TL;DR

Quantum Zeitgeist reports on a new "Domain-Aware Quantum Circuit" (DAQC) that uses image-structure priors to improve quantum machine learning (QML) performance on today's noisy intermediate-scale quantum (NISQ) devices. The researchers report that DAQC matches strong classical baselines on image benchmarks while using only 16 qubits and a few hundred trainable parameters, and they claim the best QML results yet achieved on real quantum hardware.

About this summary

This article aggregates reporting from 2 news sources. The TL;DR is AI-generated from original reporting. Race to AGI's analysis provides editorial context on implications for AGI development.

Race to AGI Analysis

The DAQC work is another data point suggesting that quantum machine learning is edging from hype toward concrete, if still early, advantages. By encoding local image structure directly into the layout and connectivity of a 16-qubit circuit, the authors sidestep some of the barren-plateau problems (training gradients that vanish as circuits grow) that plague generic parameterized quantum circuits. They report accuracy competitive with classical CNNs such as ResNet and EfficientNet on MNIST-style datasets, while dramatically cutting parameter counts and input resolution.
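To make "encoding local image structure into layout and connectivity" more concrete, here is a minimal Python sketch under loudly stated assumptions. It is not the DAQC authors' circuit: the 4×4 patch grid, the average-pool angle encoding, the nearest-neighbour entangling pattern, the "one angle per qubit plus one per entangling pair" layer, and the helper names `grid_edges`, `encode_angles` and `count_params` are all hypothetical choices for illustration. It only counts structure and parameters (no quantum simulation), to show why restricting entanglement to neighbouring image patches keeps parameter counts in the hundreds.

```python
import math
from itertools import product

GRID = 4  # hypothetical: 16 qubits arranged as a 4x4 grid, one qubit per image patch


def qubit_index(row: int, col: int, n: int = GRID) -> int:
    """Row-major mapping from a patch position to a qubit index."""
    return row * n + col


def grid_edges(n: int = GRID) -> list[tuple[int, int]]:
    """Entangling pairs restricted to horizontally/vertically adjacent patches."""
    edges = []
    for r, c in product(range(n), range(n)):
        if c + 1 < n:  # neighbour to the right
            edges.append((qubit_index(r, c, n), qubit_index(r, c + 1, n)))
        if r + 1 < n:  # neighbour below
            edges.append((qubit_index(r, c, n), qubit_index(r + 1, c, n)))
    return edges


def encode_angles(image: list[list[float]], n: int = GRID) -> list[float]:
    """Average-pool a square image (pixel values in [0, 1]) into n x n patches,
    then map each patch mean to a rotation angle in [0, pi]."""
    step = len(image) // n
    angles = []
    for r, c in product(range(n), range(n)):
        patch = [image[i][j]
                 for i in range(r * step, (r + 1) * step)
                 for j in range(c * step, (c + 1) * step)]
        angles.append(math.pi * sum(patch) / len(patch))
    return angles


def count_params(n_qubits: int, n_edges: int, layers: int) -> int:
    """Hypothetical ansatz: per layer, one trainable single-qubit angle per qubit
    plus one trainable coupling angle per entangling pair."""
    return layers * (n_qubits + n_edges)


if __name__ == "__main__":
    local = grid_edges()
    all_to_all = GRID**2 * (GRID**2 - 1) // 2
    print(len(local), all_to_all)                  # 24 120
    print(count_params(16, len(local), layers=8))  # 320
    print(count_params(16, all_to_all, layers=8))  # 1088
    # A 28x28 "MNIST-sized" toy image: top half white, bottom half black.
    image = [[1.0 if i < 14 else 0.0 for _ in range(28)] for i in range(28)]
    print(len(encode_angles(image)))               # 16
```

With eight such layers, the nearest-neighbour layout yields 320 trainable angles versus 1,088 for all-to-all coupling. The layer count is an arbitrary assumption chosen for illustration, not a figure from the DAQC paper.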

For AGI watchers, the immediate impact is modest (these are still toy-scale problems), but the direction of travel is important. If hardware and circuit design continue to co-evolve, we could eventually see hybrid classical–quantum training loops in which quantum accelerators handle specific high-cost subroutines inside very large models. That is particularly relevant for sampling, combinatorial optimization, and structured generative tasks. In a world where classical scaling runs into energy and cost walls, even a narrow but reliable quantum speedup on key workloads could effectively extend the compute frontier that AGI labs rely on.

Who Should Care

Companies Mentioned

Related News

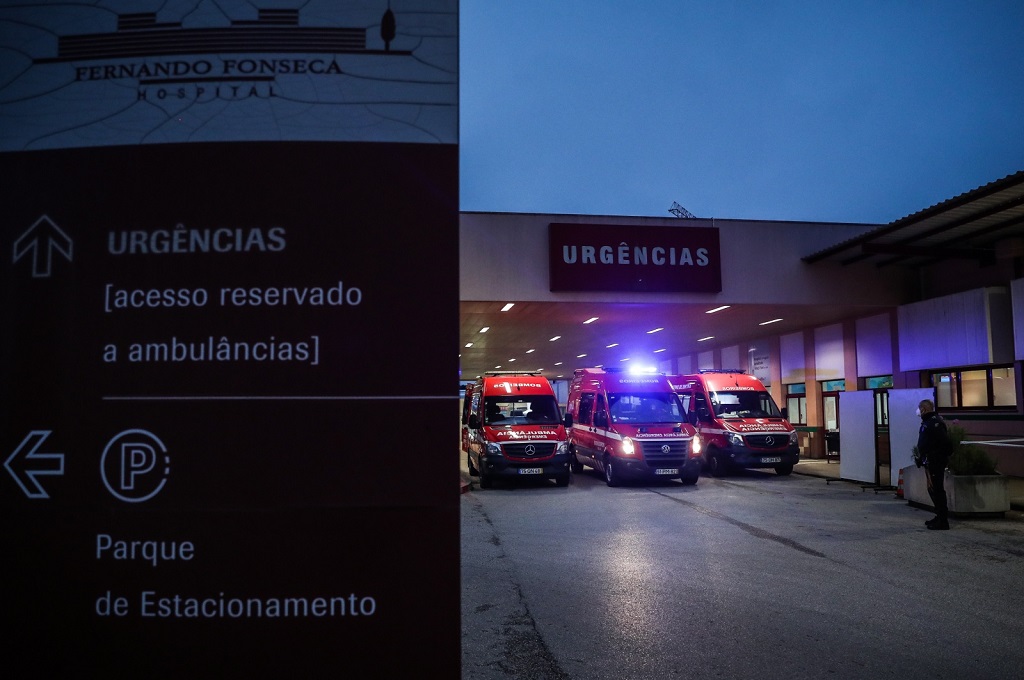

Portugal hospital deploys AI triage pilot to cut ER wait times by an hour

Today

Microsoft CoreAI project targets AI translation of legacy Windows code

Today

Google Disco AI browser turns tabs into custom web apps

Today