Apple SHARP model turns single photos into 3D scenes in under a second

TL;DR

Apple-linked researchers have released SHARP, an AI model that reconstructs photorealistic 3D scenes from a single 2D image in under one second on a standard GPU. The open‑source model and accompanying paper drew fresh coverage on December 20, 2025, following technical disclosures earlier in the week.

About this summary

This article aggregates reporting from 5 news sources. The TL;DR is AI-generated from original reporting. Race to AGI's analysis provides editorial context on implications for AGI development.

Race to AGI Analysis

SHARP matters because it pushes “world models” from lab curiosity closer to commodity tooling. Apple’s research shows a single image can be lifted into a metrically accurate 3D representation in under a second on a standard GPU, using Gaussian splatting and a single feed‑forward pass, while beating prior methods by 25–34% on perceptual similarity benchmarks and cutting synthesis time by three orders of magnitude.([ithome.com](https://www.ithome.com/0/905/857.htm?utm_source=openai)) That is a big leap in both capability and efficiency for 3D perception.

In the AGI race, such models are critical infrastructure for agents that need to reason about the physical world. High‑fidelity, fast 3D reconstructions are the backbone for robotics simulation, AR/VR, autonomous navigation and embodied assistants. Open‑sourcing SHARP lowers the barrier for startups and labs to build spatially aware systems without Apple‑scale internal tooling.([9to5mac.com](https://9to5mac.com/2025/12/17/apple-sharp-ai-model-turns-2d-photos-into-3d-views/?utm_source=openai)) It also signals that major incumbents beyond the usual LLM giants are quietly stockpiling advanced perception tech, which can later be fused with language models and planning layers.

Competitive dynamics will likely push others, including Google, Meta, Nvidia and Chinese labs, to match or exceed SHARP’s performance. As 3D scene understanding becomes cheap and ubiquitous, expect a wave of agentic applications that treat the real world, not just text, as their native substrate.

Who Should Care

Companies Mentioned

Related News

Firefox promises global AI kill switch and strict opt‑in for browser features

Today

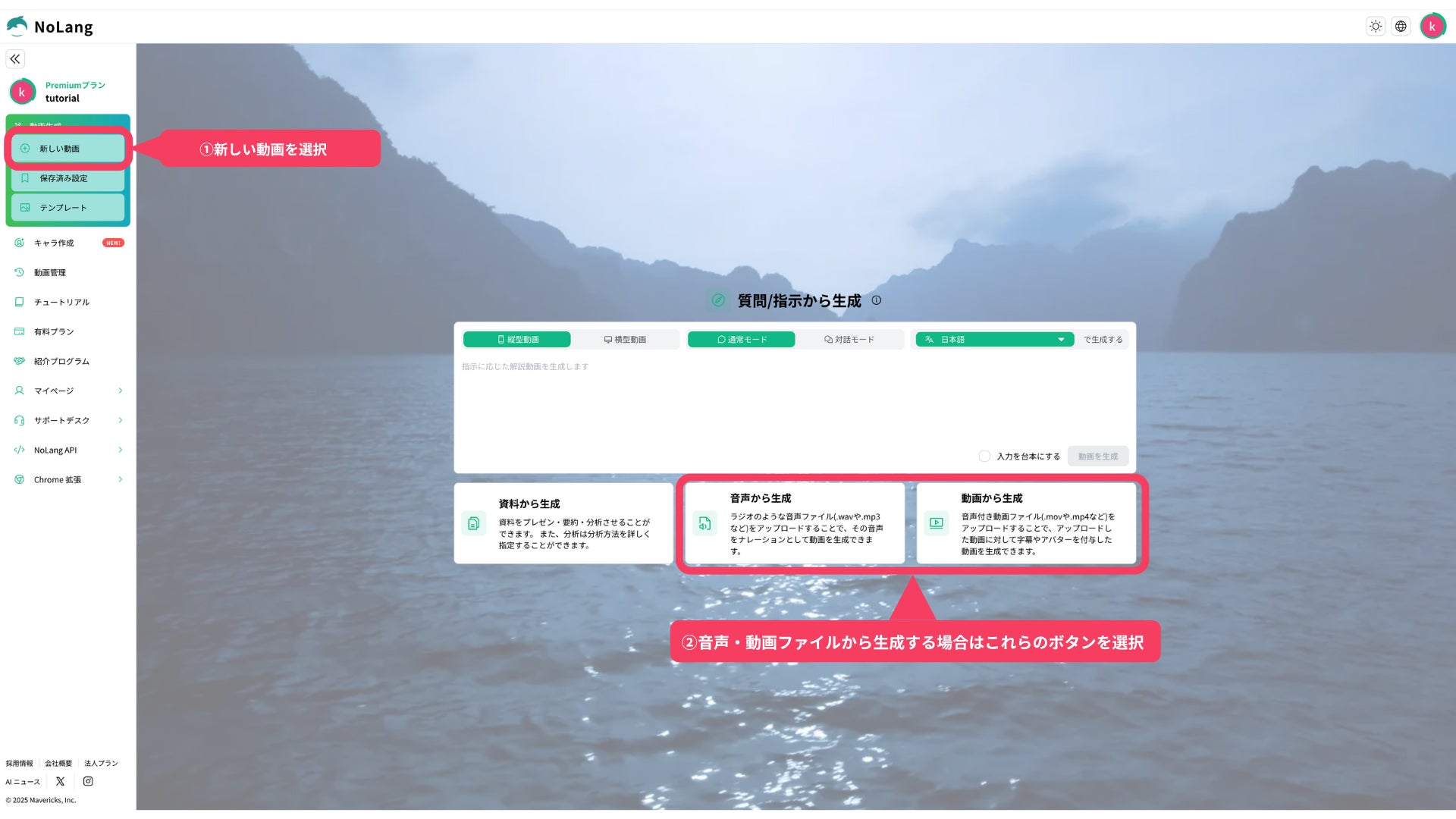

NoLang video AI adds instant 18-language subtitle generation

Today

Bairong unveils Results Cloud AI agent platform and RaaS model

Today