Kakao releases Kanana-2 open-source LLM for agentic AI

TL;DR

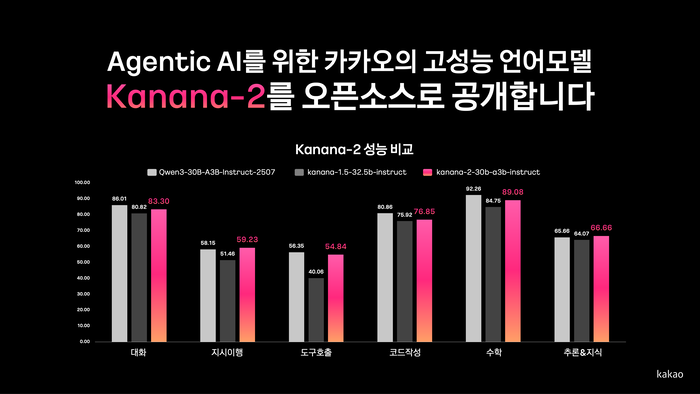

On December 19, 2025, Kakao released its next-generation language model Kanana‑2 as open source on Hugging Face, including Base, Instruct and Thinking variants. The company says Kanana‑2 sharply improves tool-calling, multi‑turn reasoning and efficiency for building agentic AI systems.

About this summary

This article aggregates reporting from 2 news sources. The TL;DR is AI-generated from original reporting. Race to AGI's analysis provides editorial context on implications for AGI development.

Race to AGI Analysis

Kanana‑2 is a notable move in the open‑weight ecosystem because it targets agentic workloads, not just chat benchmarks. Kakao is explicitly optimizing for multi‑turn tool calling, instruction following, and a dedicated "Thinking" variant, and it’s doing so with modern efficiency techniques such as multi‑head latent attention (MLA) and mixture‑of‑experts (MoE) layers to keep inference costs manageable. For a Korean platform company with deep consumer reach, releasing this stack as open source is both a hedge against closed Western models and a way to galvanize a regional developer community.
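To illustrate why mixture‑of‑experts keeps inference costs down, here is a minimal, generic sketch of top‑k expert routing in plain Python. It is not Kanana‑2's implementation: the expert count, the value of k, the toy scalar "experts", and the parameter sizes are all hypothetical, and MLA is not modeled. The point it shows is that each token runs through only k of the n experts, so the compute per token scales with the active parameters rather than the total.

```python
import math
from typing import Callable, List, Tuple


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Select the k highest-scoring experts and renormalize their gate weights.

    This is one common routing variant (top-k, then softmax over the winners);
    real models differ in the details.
    """
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in ranked])
    return list(zip(ranked, weights))


def moe_forward(x: float, experts: List[Callable[[float], float]],
                router_logits: List[float], k: int) -> float:
    """Combine the outputs of only the selected experts; the rest are never evaluated."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


def active_param_fraction(n_experts: int, k: int, expert_params: int, shared_params: int) -> float:
    """Fraction of parameters touched per token (hypothetical sizes, for illustration)."""
    total = shared_params + n_experts * expert_params
    active = shared_params + k * expert_params
    return active / total


if __name__ == "__main__":
    # Hypothetical toy setup: expert i simply multiplies its input by i.
    experts = [lambda x, s=s: s * x for s in range(4)]
    logits = [0.1, 2.0, -1.0, 1.0]
    print(top_k_route(logits, k=2))          # experts 1 and 3 are selected
    print(moe_forward(1.0, experts, logits, k=2))
    print(active_param_fraction(n_experts=8, k=2, expert_params=1, shared_params=0))
```

With 8 experts and k = 2 (hypothetical numbers), each token touches only a quarter of the expert parameters, which is the kind of saving that lets a large MoE model run at a fraction of a dense model's per‑token cost.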

In the bigger picture, Kanana‑2 adds to a growing wave of non‑US open‑weight models that are “good enough” for many agentic applications, especially when localized to a particular language and domain. That undercuts the assumption that frontier capabilities will be accessible only via closed APIs from a handful of US labs. For the race to AGI, these regional open stacks don’t immediately change the frontier, but they create a much denser experimentation layer for agents, tools and vertical copilots. Over time, the diversity of these ecosystems (Qwen in China, Kanana in Korea, others in Europe and India) may matter as much as any single flagship model in shaping how quickly agentic systems become ubiquitous.