Generative AI reshapes China’s academic rules on plagiarism

Summary

A long-form essay syndicated by China Science Daily and carried on Sina argues that generative AI is fundamentally rewriting what plagiarism means in academic life. The author contends that traditional rules, which focus on copying identifiable text or ideas, break down when AI-generated prose is a statistical remix of vast, diffuse sources and when AI quietly supports everything from structure to wording. The piece suggests that the real ethical fault line is shifting from “improper sourcing” to “false academic subjecthood”: whether a named author genuinely understands and can defend the work attributed to them. That shift, the article argues, will push universities and journals away from policing writing processes and AI usage per se and toward demanding demonstrable originality, reproducibility and accountable authorship, enforced through tougher defenses, more oral examinations and deeper data scrutiny. The essay is a nuanced counter to AI panic. Its message, in effect, is that using AI is not the scandal; pretending that AI’s work reflects your own scientific competence is.

Related News

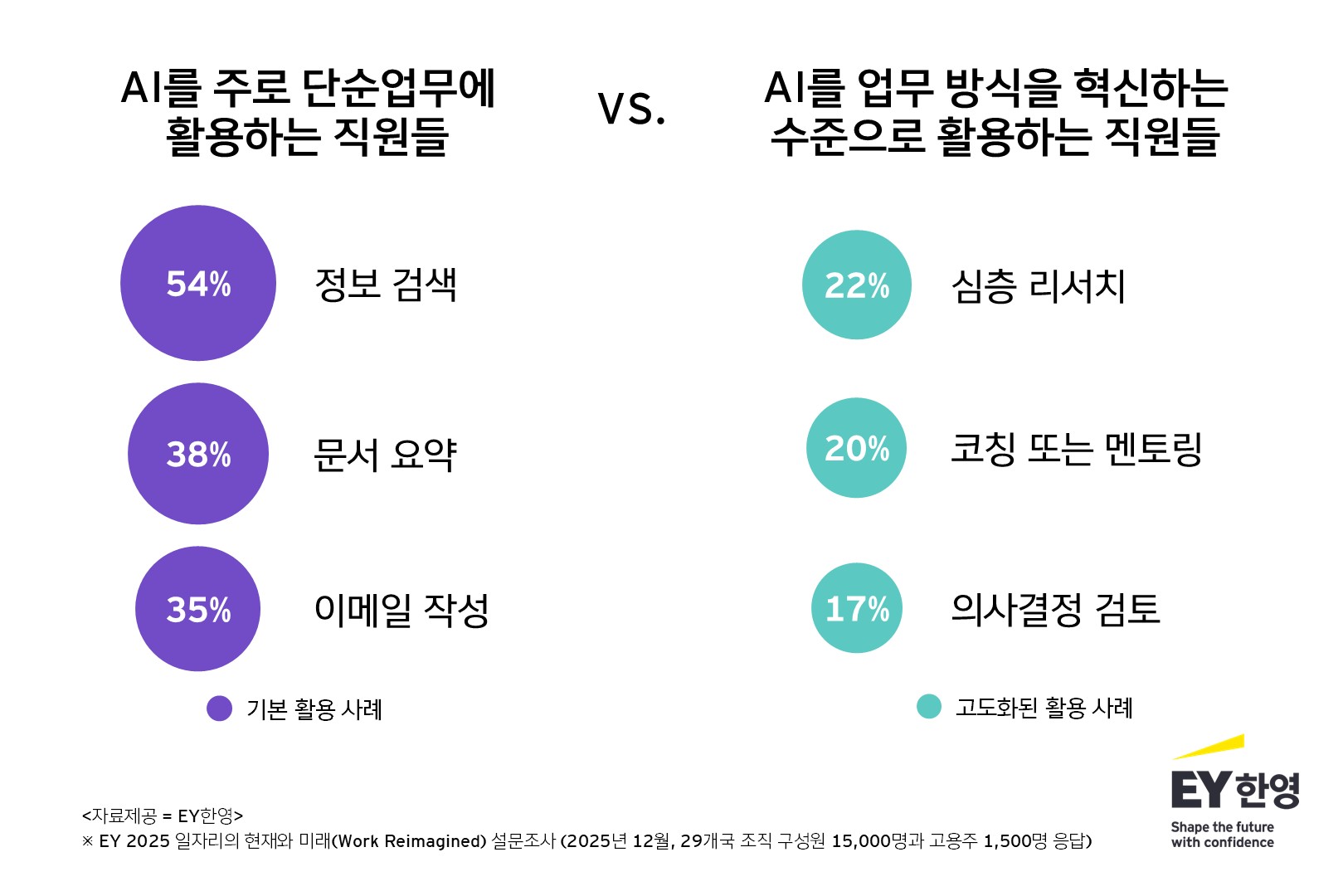

EY Korea survey reveals AI productivity gains hinge on talent strategy

Today

Machida City wins generative AI award for public service platform

Today